Powell's method

Powell's method, strictly Powell's conjugate direction method, is an algorithm proposed by Michael J. D. Powell for finding a local minimum of a function. The function need not be differentiable, and no derivatives are taken.

The function must be a real-valued function of a fixed number of real-valued inputs. The caller passes in the initial point and a set of initial search vectors. Typically N search vectors (say [math]\displaystyle{ \{s_1, \dots, s_N\} }[/math]) are passed in, which are simply the unit vectors aligned with each coordinate axis.[1]
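As a minimal sketch of these inputs, the following Python fragment builds the axis-aligned search vectors for an N-dimensional problem. The names `axis_directions`, `objective`, `x0` and `directions` are illustrative assumptions, and the objective is an arbitrary example chosen to be non-differentiable in its second argument.

```python
def axis_directions(n):
    """Return the n axis-aligned unit vectors e_1, ..., e_n as lists."""
    return [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]


def objective(x):
    """Hypothetical example objective; not differentiable where x[1] == -2."""
    return (x[0] - 1.0) ** 2 + abs(x[1] + 2.0)


x0 = [0.0, 0.0]                          # initial point supplied by the caller
directions = axis_directions(len(x0))    # initial search vectors s_1, ..., s_N
```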

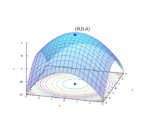

The method minimises the function by a bi-directional line search along each search vector in turn. Each line search can be carried out by golden-section search or Brent's method. Let the minima found during the successive line searches be [math]\displaystyle{ \{ x_0 + \alpha_1s_1, {x}_0 + \sum_{i=1}^{2}\alpha_i{s}_i, \dots, {x}_0 +\sum_{i=1}^{N}\alpha_i{s}_i \} }[/math], where [math]\displaystyle{ {x}_0 }[/math] is the starting point and [math]\displaystyle{ \alpha_i }[/math] is the scalar step determined by the line search along [math]\displaystyle{ {s}_i }[/math].

The new position ([math]\displaystyle{ x_1 }[/math]) can then be expressed as a linear combination of the search vectors, i.e. [math]\displaystyle{ x_1 = x_0 + \sum_{i=1}^N \alpha_i s_i }[/math]. The displacement vector ([math]\displaystyle{ \sum_{i=1}^N \alpha_i s_i }[/math]) becomes a new search vector and is appended to the search vector list. Meanwhile, the search vector that contributed most to the new direction, i.e. the one that was most successful ([math]\displaystyle{ i_{d} = \arg \max_{i=1}^N |\alpha_i| \|s_i\| }[/math]), is deleted from the list. The new set of N search vectors is [math]\displaystyle{ \{ s_1, \dots, s_{i_d - 1}, s_{i_d + 1}, \dots, s_N, \sum_{i=1}^N \alpha_i s_i \} }[/math]. The algorithm repeats this cycle until an iteration yields no significant improvement.[1]
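The iteration described above can be sketched in plain Python as follows. This is an illustrative sketch rather than a reference implementation: it follows the description in this article, uses a simple bracketing step followed by golden-section search for the line minimisation, and all names and parameters (`line_minimize`, `powell`, `step`, `tol`, `max_iter`) are assumptions introduced for the example.

```python
import math


def line_minimize(f, x, s, step=1.0, tol=1e-8):
    """Approximate the alpha (of either sign) minimising f(x + alpha * s)."""
    def g(alpha):
        return f([xi + alpha * si for xi, si in zip(x, s)])

    # Bracket a minimum: step downhill from alpha = 0, in the positive or
    # negative direction, until the function value stops decreasing.
    a, b = 0.0, step
    f0, fb = g(a), g(b)
    if fb > f0:
        a, b, fb = b, a, f0          # downhill is towards negative alpha
    c = b + 1.618 * (b - a)
    fc = g(c)
    for _ in range(100):             # cap the expansion (assumed limit)
        if fc >= fb:
            break
        a, b, fb = b, c, fc
        c = b + 1.618 * (b - a)
        fc = g(c)
    lo, hi = min(a, c), max(a, c)

    # Golden-section search on the bracket [lo, hi].
    invphi = (math.sqrt(5.0) - 1.0) / 2.0
    x1, x2 = hi - invphi * (hi - lo), lo + invphi * (hi - lo)
    f1, f2 = g(x1), g(x2)
    for _ in range(200):
        if hi - lo <= tol:
            break
        if f1 < f2:
            hi, x2, f2 = x2, x1, f1
            x1 = hi - invphi * (hi - lo)
            f1 = g(x1)
        else:
            lo, x1, f1 = x1, x2, f2
            x2 = lo + invphi * (hi - lo)
            f2 = g(x2)
    alpha = 0.5 * (lo + hi)
    return alpha if g(alpha) < f0 else 0.0   # never accept an uphill step


def powell(f, x0, directions=None, tol=1e-10, max_iter=100):
    """Minimise f from x0 using the direction-replacement rule described above."""
    n = len(x0)
    x = list(x0)
    if directions is None:           # default: axis-aligned unit vectors
        directions = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    fx = f(x)
    for _ in range(max_iter):
        x_start, f_start = x, fx
        alphas = []
        for s in directions:         # line search along each s_i in turn
            alpha = line_minimize(f, x, s)
            x = [xi + alpha * si for xi, si in zip(x, s)]
            alphas.append(alpha)
        fx = f(x)
        if f_start - fx <= tol:      # no significant improvement: stop
            break
        new_dir = [xi - xs for xi, xs in zip(x, x_start)]   # sum of alpha_i * s_i
        # Delete the most successful direction: largest |alpha_i| * ||s_i||.
        i_d = max(range(n), key=lambda i: abs(alphas[i])
                  * math.sqrt(sum(v * v for v in directions[i])))
        directions = directions[:i_d] + directions[i_d + 1:] + [new_dir]
    return x, fx


# Usage: a coupled quadratic whose minimum is at (2, -1) with value -3.
x_min, f_min = powell(lambda p: p[0] ** 2 + p[0] * p[1] + p[1] ** 2 - 3.0 * p[0],
                      [0.0, 0.0])
```

Golden-section search is used here only because it is short to write; Brent's method would normally need fewer function evaluations per line search.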

The method is useful for finding a local minimum of a continuous but complicated function, especially one that is not given by an explicit formula, because it is not necessary to take derivatives. The basic algorithm is simple; the complexity lies in the line searches along the search vectors, which can be carried out with Brent's method.

References

- Powell, M. J. D. (1964). "An efficient method for finding the minimum of a function of several variables without calculating derivatives". Computer Journal 7 (2): 155–162. doi:10.1093/comjnl/7.2.155.

- Press, WH; Teukolsky, SA; Vetterling, WT; Flannery, BP (2007). "Section 10.7. Direction Set (Powell's) Methods in Multidimensions". Numerical Recipes: The Art of Scientific Computing (3rd ed.). New York: Cambridge University Press. ISBN 978-0-521-88068-8. http://apps.nrbook.com/empanel/index.html#pg=509.

- Brent, Richard P. (1973). "Section 7.3: Powell's algorithm". Algorithms for minimization without derivatives. Englewood Cliffs, N.J.: Prentice-Hall. ISBN 0-486-41998-3. https://books.google.com/books?id=6Ay2biHG-GEC&lpg=PP1.
