Poisson point process

| Quantity | Expression |
|---|---|
| Mean | [math]\displaystyle{ a_{0, t} = \int_{0}^{t} \lambda(\alpha) d\alpha }[/math] |
| Variance | [math]\displaystyle{ a_{0, t} + (a_{0, t})^2 - (a_{0, t})^2 = a_{0, t} }[/math] |

In probability, statistics and related fields, a Poisson point process is a type of random mathematical object that consists of points randomly located on a mathematical space with the essential feature that the points occur independently of one another.[1] The Poisson point process is also called a Poisson random measure, Poisson random point field or Poisson point field. When the process is defined on the real line, it is often called simply the Poisson process.

This point process has convenient mathematical properties,[2] which has led to its being frequently defined in Euclidean space and used as a mathematical model for seemingly random processes in numerous disciplines such as astronomy,[3] biology,[4] ecology,[5] geology,[6] seismology,[7] physics,[8] economics,[9] image processing,[10][11] and telecommunications.[12][13]

The process is named after French mathematician Siméon Denis Poisson despite Poisson's never having studied the process. Its name derives from the fact that if a collection of random points in some space forms a Poisson process, then the number of points in a region of finite size is a random variable with a Poisson distribution. The process was discovered independently and repeatedly in several settings, including experiments on radioactive decay, telephone call arrivals and insurance mathematics.[14][15]

The Poisson point process is often defined on the real line, where it can be considered as a stochastic process. In this setting, it is used, for example, in queueing theory[16] to model random events, such as the arrival of customers at a store, phone calls at an exchange or the occurrence of earthquakes, distributed in time. In the plane, the point process, also known as a spatial Poisson process,[17] can represent the locations of scattered objects such as transmitters in a wireless network,[12][18][19][20] particles colliding with a detector, or trees in a forest.[21] In this setting, the process is often used in mathematical models and in the related fields of spatial point processes,[22] stochastic geometry,[1] spatial statistics[22][23] and continuum percolation theory.[24] The Poisson point process can be defined on more abstract spaces. Beyond applications, the Poisson point process is an object of mathematical study in its own right.[2] In all settings, the Poisson point process has the property that each point is stochastically independent of all the other points in the process, which is why it is sometimes called a purely or completely random process.[25] Modeling a system as a Poisson process is insufficient when the point-to-point interactions are too strong (that is, when the points are not stochastically independent). Such a system may be better modeled with a different point process.[26]

The point process depends on a single mathematical object, which, depending on the context, may be a constant, a locally integrable function or, in more general settings, a Radon measure.[27] In the first case, the constant, known as the rate or intensity, is the average density of the points in the Poisson process located in some region of space. The resulting point process is called a homogeneous or stationary Poisson point process.[28] In the second case, the point process is called an inhomogeneous or nonhomogeneous Poisson point process, and the average density of points depends on the location in the underlying space of the Poisson point process.[29] The word point is often omitted,[2] but there are other Poisson processes of objects, which, instead of points, consist of more complicated mathematical objects such as lines and polygons, and such processes can be based on the Poisson point process.[30] Both the homogeneous and nonhomogeneous Poisson point processes are particular cases of the generalized renewal process.

Overview of definitions

Depending on the setting, the process has several equivalent definitions[31] as well as definitions of varying generality owing to its many applications and characterizations.[32] The Poisson point process can be defined, studied and used in one dimension, for example, on the real line, where it can be interpreted as a counting process or part of a queueing model;[33][34] in higher dimensions such as the plane where it plays a role in stochastic geometry[1] and spatial statistics;[35] or on more general mathematical spaces.[36] Consequently, the notation, terminology and level of mathematical rigour used to define and study the Poisson point process and point processes in general vary according to the context.[37]

Despite all this, the Poisson point process has two key properties, the Poisson property and the independence property, that play an essential role in all settings where the Poisson point process is used.[27][38] The two properties are not logically independent; indeed, the Poisson distribution of point counts implies the independence property,[lower-alpha 1] while in the converse direction the assumptions that the point process (i) is simple, (ii) has no fixed atoms, and (iii) is a.s. boundedly finite are required.[39]

Poisson distribution of point counts

A Poisson point process is characterized via the Poisson distribution. The Poisson distribution is the probability distribution of a random variable [math]\displaystyle{ N }[/math] (called a Poisson random variable) such that the probability that [math]\displaystyle{ \textstyle N }[/math] equals [math]\displaystyle{ \textstyle n }[/math] is given by:

- [math]\displaystyle{ \Pr \{N=n\}=\frac{\Lambda^n}{n!} e^{-\Lambda} }[/math]

where [math]\displaystyle{ n! }[/math] denotes factorial and the parameter [math]\displaystyle{ \Lambda }[/math] determines the shape of the distribution. (In fact, [math]\displaystyle{ \Lambda }[/math] equals the expected value of [math]\displaystyle{ N }[/math].)
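The formula above can be checked numerically. The following is a minimal Python sketch, not taken from the cited sources: the function name `poisson_pmf` and the value 2.5 chosen for [math]\displaystyle{ \Lambda }[/math] are illustrative assumptions. It evaluates the probabilities for small [math]\displaystyle{ n }[/math] and confirms that they sum to approximately one and have mean approximately [math]\displaystyle{ \Lambda }[/math].

```python
import math

def poisson_pmf(n, mean):
    """Probability that a Poisson random variable with the given mean equals n."""
    return mean ** n / math.factorial(n) * math.exp(-mean)

mean = 2.5  # illustrative value of the parameter Lambda (an assumption)
probs = [poisson_pmf(n, mean) for n in range(60)]

total = sum(probs)                                  # close to 1
expected = sum(n * p for n, p in enumerate(probs))  # close to Lambda
print(total, expected)
```

Truncating the sum at 60 terms is harmless here because the remaining tail probability is negligible for a mean of this size; a larger mean would need more terms.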

By definition, a Poisson point process has the property that the number of points in a bounded region of the process's underlying space is a Poisson-distributed random variable.[38]

Complete independence

Consider a collection of disjoint and bounded subregions of the underlying space. By definition, the number of points of a Poisson point process in each bounded subregion will be completely independent of all the others.

This property is known under several names such as complete randomness, complete independence,[40] or independent scattering[41][42] and is common to all Poisson point processes. In other words, there is a lack of interaction between different regions and the points in general,[43] which motivates the Poisson process being sometimes called a purely or completely random process.[40]

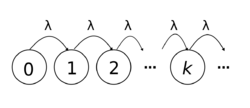

Homogeneous Poisson point process

If a Poisson point process has a parameter of the form [math]\displaystyle{ \Lambda=\nu \lambda }[/math], where [math]\displaystyle{ \nu }[/math] is Lebesgue measure (that is, it assigns length, area, or volume to sets) and [math]\displaystyle{ \lambda }[/math] is a constant, then the point process is called a homogeneous or stationary Poisson point process. The parameter, called rate or intensity, is related to the expected (or average) number of Poisson points existing in some bounded region,[44][45] where rate is usually used when the underlying space has one dimension.[44] The parameter [math]\displaystyle{ \lambda }[/math] can be interpreted as the average number of points per some unit of extent such as length, area, volume, or time, depending on the underlying mathematical space, and it is also called the mean density or mean rate;[46] see Terminology.

Interpreted as a counting process

The homogeneous Poisson point process, when considered on the positive half-line, can be defined as a counting process, a type of stochastic process, which can be denoted as [math]\displaystyle{ \{N(t), t\geq 0\} }[/math].[31][34] A counting process represents the total number of occurrences or events that have happened up to and including time [math]\displaystyle{ t }[/math]. A counting process is a homogeneous Poisson counting process with rate [math]\displaystyle{ \lambda\gt 0 }[/math] if it has the following three properties:[31][34]

- [math]\displaystyle{ N(0)=0; }[/math]

- has independent increments; and

- the number of events (or points) in any interval of length [math]\displaystyle{ t }[/math] is a Poisson random variable with parameter (or mean) [math]\displaystyle{ \lambda t }[/math].

The last property implies:

- [math]\displaystyle{ \operatorname E[N(t)]=\lambda t. }[/math]

In other words, the probability of the random variable [math]\displaystyle{ N(t) }[/math] being equal to [math]\displaystyle{ n }[/math] is given by:

- [math]\displaystyle{ \Pr \{N(t)=n\}=\frac{(\lambda t)^n}{n!} e^{-\lambda t}. }[/math]

The Poisson counting process can also be defined by stating that the time differences between events of the counting process are exponential variables with mean [math]\displaystyle{ 1/\lambda }[/math].[47] The time differences between the events or arrivals are known as interarrival[48] or interoccurrence times.[47]
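The interarrival description suggests a direct way to generate a sample path. The sketch below is an illustration under stated assumptions: the function name `homogeneous_arrivals`, the rate 3, the time horizon 10 and the random seed are all hypothetical choices. It accumulates exponential gaps with mean [math]\displaystyle{ 1/\lambda }[/math] and compares the average number of arrivals with [math]\displaystyle{ \lambda t }[/math].

```python
import random

def homogeneous_arrivals(rate, horizon, rng):
    """Arrival times in (0, horizon] built from exponential interarrival times."""
    times = []
    t = rng.expovariate(rate)  # first gap, mean 1 / rate
    while t <= horizon:
        times.append(t)
        t += rng.expovariate(rate)  # next independent gap
    return times

rng = random.Random(0)       # fixed seed so the run is reproducible
rate, horizon = 3.0, 10.0    # illustrative values
runs = [len(homogeneous_arrivals(rate, horizon, rng)) for _ in range(2000)]
mean_count = sum(runs) / len(runs)
print(mean_count)            # should be near rate * horizon
```

Averaged over many runs, the count should settle near [math]\displaystyle{ \lambda t }[/math], in line with the third defining property of the counting process.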

Interpreted as a point process on the real line

Interpreted as a point process, a Poisson point process can be defined on the real line by considering the number of points of the process in the interval [math]\displaystyle{ (a,b] }[/math]. For the homogeneous Poisson point process on the real line with parameter [math]\displaystyle{ \lambda\gt 0 }[/math], the probability of this random number of points, written here as [math]\displaystyle{ N(a,b] }[/math], being equal to some counting number [math]\displaystyle{ n }[/math] is given by:[49]

- [math]\displaystyle{ \Pr \{N(a,b]=n\}=\frac{[\lambda(b-a)]^n}{n!} e^{-\lambda (b-a)}. }[/math]

For some positive integer [math]\displaystyle{ k }[/math], the homogeneous Poisson point process has the finite-dimensional distribution given by:[49]

- [math]\displaystyle{ \Pr \{N(a_i,b_i]=n_i, i=1, \dots, k\} = \prod_{i=1}^k\frac{[\lambda(b_i-a_i)]^{n_i}}{n_i!} e^{-\lambda(b_i-a_i)}, }[/math]

where the real numbers [math]\displaystyle{ a_i\lt b_i\leq a_{i+1} }[/math].

In other words, [math]\displaystyle{ N(a,b] }[/math] is a Poisson random variable with mean [math]\displaystyle{ \lambda(b-a) }[/math], where [math]\displaystyle{ a\le b }[/math]. Furthermore, the numbers of points in any two disjoint intervals, say [math]\displaystyle{ (a_1,b_1] }[/math] and [math]\displaystyle{ (a_2,b_2] }[/math], are independent of each other, and this extends to any finite number of disjoint intervals.[49] In the queueing theory context, one can consider a point existing (in an interval) as an event, but this differs from the meaning of the word event in probability theory.[lower-alpha 2] It follows that [math]\displaystyle{ \lambda }[/math] is the expected number of arrivals that occur per unit of time.[34]

Key properties

The previous definition has two important features shared by Poisson point processes in general:[49][27]

- the number of arrivals in each finite interval has a Poisson distribution;

- the numbers of arrivals in disjoint intervals are independent random variables.

Furthermore, it has a third feature related to just the homogeneous Poisson point process:[50]

- the Poisson distribution of the number of arrivals in each interval [math]\displaystyle{ (a+t,b+t] }[/math] only depends on the interval's length [math]\displaystyle{ b-a }[/math].

In other words, for any finite [math]\displaystyle{ t\gt 0 }[/math], the distribution of the random variable [math]\displaystyle{ N(a+t,b+t] }[/math] does not depend on [math]\displaystyle{ t }[/math], so the process is also called a stationary Poisson process.[49]

Law of large numbers

The quantity [math]\displaystyle{ \lambda(b_i-a_i) }[/math] can be interpreted as the expected or average number of points occurring in the interval [math]\displaystyle{ (a_i,b_i] }[/math], namely:

- [math]\displaystyle{ \operatorname E[N(a_i,b_i]] =\lambda(b_i-a_i), }[/math]

where [math]\displaystyle{ \operatorname E }[/math] denotes the expectation operator. In other words, the parameter [math]\displaystyle{ \lambda }[/math] of the Poisson process coincides with the density of points. Furthermore, the homogeneous Poisson point process adheres to its own form of the (strong) law of large numbers.[51] More specifically, with probability one:

- [math]\displaystyle{ \lim_{t\rightarrow \infty} \frac{N(t)}{t} =\lambda, }[/math]

where [math]\displaystyle{ \lim }[/math] denotes the limit of a function, and [math]\displaystyle{ \lambda }[/math] is the expected number of arrivals per unit of time.
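The limit can be observed on a single simulated path. The following Python sketch is illustrative only: the rate 2, the checkpoints and the seed are assumptions, and the path is generated from exponential interarrival times. It prints [math]\displaystyle{ N(t)/t }[/math] at increasing values of [math]\displaystyle{ t }[/math].

```python
import random

rng = random.Random(1)   # fixed seed for reproducibility
rate = 2.0               # illustrative value of lambda
checkpoints = [10.0, 100.0, 1000.0, 10000.0]

# Generate one sample path up to the largest checkpoint.
arrivals = []
t = rng.expovariate(rate)
while t <= checkpoints[-1]:
    arrivals.append(t)
    t += rng.expovariate(rate)

# N(h) / h along the same path, for increasing h.
ratios = [sum(1 for s in arrivals if s <= h) / h for h in checkpoints]
print(ratios)
```

The ratios at small [math]\displaystyle{ t }[/math] can fluctuate noticeably, while the later ones should lie close to [math]\displaystyle{ \lambda }[/math].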

Memoryless property

The distance between two consecutive points of the homogeneous Poisson point process on the real line is an exponential random variable with parameter [math]\displaystyle{ \lambda }[/math] (or equivalently, mean [math]\displaystyle{ 1/\lambda }[/math]). This implies that the points have the memoryless property: the existence of one point in a finite interval does not affect the probability (distribution) of other points existing,[52][53] but this property has no natural equivalent when the Poisson process is defined on a space with higher dimensions.[54]
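The memoryless property can be verified directly from the exponential distribution. As a brief sketch, write [math]\displaystyle{ T }[/math] for the distance between two consecutive points (a symbol introduced here only for illustration) and take [math]\displaystyle{ s,t\geq 0 }[/math]. Then:

- [math]\displaystyle{ \Pr\{T \gt s+t \mid T \gt s\} = \frac{\Pr\{T \gt s+t\}}{\Pr\{T \gt s\}} = \frac{e^{-\lambda(s+t)}}{e^{-\lambda s}} = e^{-\lambda t} = \Pr\{T \gt t\}. }[/math]

Having already waited a distance [math]\displaystyle{ s }[/math] without meeting a point therefore does not change the distribution of the remaining distance.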

Orderliness and simplicity

A point process with stationary increments is sometimes said to be orderly[55] or regular if:[56]

- [math]\displaystyle{ \Pr \{ N(t,t+\delta]\gt 1 \} = o(\delta), }[/math]

where little-o notation is being used. A point process is called a simple point process when the probability of any two of its points coinciding in the same position, on the underlying space, is zero. For point processes in general on the real line, the property of orderliness implies that the process is simple,[57] which is the case for the homogeneous Poisson point process.[58]
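For the homogeneous Poisson point process, orderliness can be checked from the Poisson distribution of [math]\displaystyle{ N(t,t+\delta] }[/math], which has mean [math]\displaystyle{ \lambda\delta }[/math]. As a short worked calculation:

- [math]\displaystyle{ \Pr \{ N(t,t+\delta]\gt 1 \} = 1 - e^{-\lambda\delta} - \lambda\delta e^{-\lambda\delta} = 1 - e^{-\lambda\delta}(1+\lambda\delta) = \frac{(\lambda\delta)^2}{2} + O(\delta^3) = o(\delta). }[/math]

The leading term is of order [math]\displaystyle{ \delta^2 }[/math], so the probability of two or more points in a short interval vanishes faster than the interval's length.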

Martingale characterization

On the real line, the homogeneous Poisson point process has a connection to the theory of martingales via the following characterization: a point process is the homogeneous Poisson point process if and only if

- [math]\displaystyle{ N(-\infty,t]-\lambda t }[/math]

is a martingale.

Relationship to other processes

On the real line, the Poisson process is a type of continuous-time Markov process known as a birth process, a special case of the birth–death process (with just births and zero deaths).[61][62] More complicated processes with the Markov property, such as Markov arrival processes, have been defined where the Poisson process is a special case.[47]

Restricted to the half-line

If the homogeneous Poisson process is considered just on the half-line [math]\displaystyle{ [0,\infty) }[/math], which can be the case when [math]\displaystyle{ t }[/math] represents time,[31] then the resulting process is not truly invariant under translation.[54] In that case the Poisson process is no longer stationary, according to some definitions of stationarity.[28]

Applications

The homogeneous Poisson process on the real line has been applied many times to model seemingly random and independent events. It has a fundamental role in queueing theory, the field of probability concerned with developing suitable stochastic models to represent the random arrival and departure of certain phenomena.[16][47] For example, customers arriving and being served or phone calls arriving at a phone exchange can both be studied with techniques from queueing theory.

Generalizations

The homogeneous Poisson process on the real line is considered one of the simplest stochastic processes for counting random numbers of points.[63][64] This process can be generalized in a number of ways. One possible generalization is to extend the distribution of interarrival times from the exponential distribution to other distributions, which introduces the stochastic process known as a renewal process. Another generalization is to define the Poisson point process on higher dimensional spaces such as the plane.[65]

Spatial Poisson point process

A spatial Poisson process is a Poisson point process defined in the plane [math]\displaystyle{ \textstyle \mathbb{R}^2 }[/math].[59][66] For its mathematical definition, one first considers a bounded, open or closed (or more precisely, Borel measurable) region [math]\displaystyle{ B }[/math] of the plane. The number of points of a point process [math]\displaystyle{ \textstyle N }[/math] existing in this region [math]\displaystyle{ \textstyle B\subset \mathbb{R}^2 }[/math] is a random variable, denoted by [math]\displaystyle{ \textstyle N(B) }[/math]. If the points belong to a homogeneous Poisson process with parameter [math]\displaystyle{ \textstyle \lambda\gt 0 }[/math], then the probability of [math]\displaystyle{ \textstyle n }[/math] points existing in [math]\displaystyle{ \textstyle B }[/math] is given by:

- [math]\displaystyle{ \Pr \{N(B)=n\}=\frac{(\lambda|B|)^n}{n!} e^{-\lambda|B|} }[/math]

where [math]\displaystyle{ \textstyle |B| }[/math] denotes the area of [math]\displaystyle{ \textstyle B }[/math].

For some finite integer [math]\displaystyle{ \textstyle k\geq 1 }[/math], we can give the finite-dimensional distribution of the homogeneous Poisson point process by first considering a collection of disjoint, bounded Borel (measurable) sets [math]\displaystyle{ \textstyle B_1,\dots,B_k }[/math]. The number of points of the point process [math]\displaystyle{ \textstyle N }[/math] existing in [math]\displaystyle{ \textstyle B_i }[/math] can be written as [math]\displaystyle{ \textstyle N(B_i) }[/math]. Then the homogeneous Poisson point process with parameter [math]\displaystyle{ \textstyle \lambda\gt 0 }[/math] has the finite-dimensional distribution:[67]

- [math]\displaystyle{ \Pr \{N(B_i)=n_i, i=1, \dots, k\}=\prod_{i=1}^k\frac{(\lambda|B_i|)^{n_i}}{n_i!}e^{-\lambda|B_i|}. }[/math]

Applications

The spatial Poisson point process features prominently in spatial statistics,[22][23] stochastic geometry, and continuum percolation theory.[24] This point process is applied in various physical sciences such as a model developed for alpha particles being detected. In recent years, it has been frequently used to model seemingly disordered spatial configurations of certain wireless communication networks.[18][19][20] For example, models for cellular or mobile phone networks have been developed where it is assumed the phone network transmitters, known as base stations, are positioned according to a homogeneous Poisson point process.

Defined in higher dimensions

The previous homogeneous Poisson point process immediately extends to higher dimensions by replacing the notion of area with (high dimensional) volume. For some bounded region [math]\displaystyle{ \textstyle B }[/math] of Euclidean space [math]\displaystyle{ \textstyle \mathbb{R}^d }[/math], if the points form a homogeneous Poisson process with parameter [math]\displaystyle{ \textstyle \lambda\gt 0 }[/math], then the probability of [math]\displaystyle{ \textstyle n }[/math] points existing in [math]\displaystyle{ \textstyle B\subset \mathbb{R}^d }[/math] is given by:

- [math]\displaystyle{ \Pr \{N(B)=n\}=\frac{(\lambda|B|)^n}{n!}e^{-\lambda|B|} }[/math]

where [math]\displaystyle{ \textstyle |B| }[/math] now denotes the [math]\displaystyle{ \textstyle d }[/math]-dimensional volume of [math]\displaystyle{ \textstyle B }[/math]. Furthermore, for a collection of disjoint, bounded Borel sets [math]\displaystyle{ \textstyle B_1,\dots,B_k \subset \mathbb{R}^d }[/math], let [math]\displaystyle{ \textstyle N(B_i) }[/math] denote the number of points of [math]\displaystyle{ \textstyle N }[/math] existing in [math]\displaystyle{ \textstyle B_i }[/math]. Then the corresponding homogeneous Poisson point process with parameter [math]\displaystyle{ \textstyle \lambda\gt 0 }[/math] has the finite-dimensional distribution:[69]

- [math]\displaystyle{ \Pr \{N(B_i)=n_i, i=1, \dots, k\}=\prod_{i=1}^k\frac{(\lambda|B_i|)^{n_i}}{n_i!} e^{-\lambda|B_i|}. }[/math]

The parameter [math]\displaystyle{ \textstyle \lambda }[/math] of a homogeneous Poisson point process does not depend on position in the underlying space, which implies that the process is both stationary (invariant under translation) and isotropic (invariant under rotation).[28] As in the one-dimensional case, if the homogeneous point process is restricted to some bounded subset of [math]\displaystyle{ \mathbb{R}^d }[/math], then, according to some definitions of stationarity, the process is no longer stationary.[28][54]

Points are uniformly distributed

If the homogeneous point process is defined on the real line as a mathematical model for occurrences of some phenomenon, then it has the characteristic that the positions of these occurrences or events on the real line (often interpreted as time) will be uniformly distributed. More specifically, if an event occurs (according to this process) in an interval [math]\displaystyle{ \textstyle (a,b] }[/math] where [math]\displaystyle{ \textstyle a \leq b }[/math], then its location will be a uniform random variable defined on that interval.[67] Furthermore, the homogeneous point process is sometimes called the uniform Poisson point process (see Terminology). This uniformity property extends to higher dimensions in Cartesian coordinates, but not in, for example, polar coordinates.[70][71]

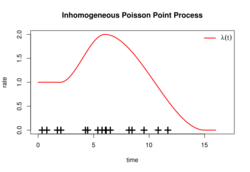

Inhomogeneous Poisson point process

The inhomogeneous or nonhomogeneous Poisson point process (see Terminology) is a Poisson point process with a Poisson parameter set as some location-dependent function in the underlying space on which the Poisson process is defined. For Euclidean space [math]\displaystyle{ \textstyle \mathbb{R}^d }[/math], this is achieved by introducing a locally integrable non-negative function [math]\displaystyle{ \lambda\colon\mathbb{R}^d\to[0,\infty) }[/math], such that for every bounded region [math]\displaystyle{ \textstyle B }[/math] the ([math]\displaystyle{ \textstyle d }[/math]-dimensional) volume integral of [math]\displaystyle{ \textstyle \lambda (x) }[/math] over region [math]\displaystyle{ \textstyle B }[/math] is finite. In other words, if this integral, denoted by [math]\displaystyle{ \textstyle \Lambda (B) }[/math], is:[45]

- [math]\displaystyle{ \Lambda (B)=\int_B \lambda(x)\,\mathrm dx \lt \infty, }[/math]

where [math]\displaystyle{ \textstyle{\mathrm dx} }[/math] is a ([math]\displaystyle{ \textstyle d }[/math]-dimensional) volume element,[lower-alpha 3] then for every collection of disjoint bounded Borel measurable sets [math]\displaystyle{ \textstyle B_1,\dots,B_k }[/math], an inhomogeneous Poisson process with (intensity) function [math]\displaystyle{ \textstyle \lambda(x) }[/math] has the finite-dimensional distribution:[69]

- [math]\displaystyle{ \Pr \{N(B_i)=n_i, i=1, \dots, k\}=\prod_{i=1}^k\frac{(\Lambda(B_i))^{n_i}}{n_i!} e^{-\Lambda(B_i)}. }[/math]

Furthermore, [math]\displaystyle{ \textstyle \Lambda (B) }[/math] has the interpretation of being the expected number of points of the Poisson process located in the bounded region [math]\displaystyle{ \textstyle B }[/math], namely

- [math]\displaystyle{ \Lambda (B)= \operatorname E[N(B)] . }[/math]

Defined on the real line

On the real line, the inhomogeneous or non-homogeneous Poisson point process has mean measure given by a one-dimensional integral. For two real numbers [math]\displaystyle{ \textstyle a }[/math] and [math]\displaystyle{ \textstyle b }[/math], where [math]\displaystyle{ \textstyle a\leq b }[/math], denote by [math]\displaystyle{ \textstyle N(a,b] }[/math] the number of points of an inhomogeneous Poisson process with intensity function [math]\displaystyle{ \textstyle \lambda(t) }[/math] occurring in the interval [math]\displaystyle{ \textstyle (a,b] }[/math]. The probability of [math]\displaystyle{ \textstyle n }[/math] points existing in the above interval [math]\displaystyle{ \textstyle (a,b] }[/math] is given by:

- [math]\displaystyle{ \Pr \{N(a,b]=n\}=\frac{[\Lambda(a,b)]^n}{n!} e^{-\Lambda(a,b)}, }[/math]

where the mean or intensity measure is:

- [math]\displaystyle{ \Lambda(a,b)=\int_a^b \lambda (t)\,\mathrm dt, }[/math]

which means that the random variable [math]\displaystyle{ \textstyle N(a,b] }[/math] is a Poisson random variable with mean [math]\displaystyle{ \textstyle \operatorname E[N(a,b]] = \Lambda(a,b) }[/math].

A feature of the one-dimensional setting is that an inhomogeneous Poisson process can be transformed into a homogeneous one by a monotone transformation or mapping, which is achieved with the inverse of [math]\displaystyle{ \textstyle \Lambda }[/math].[72][73]
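The transformation can also be run in the simulation direction: points of a unit-rate homogeneous process are mapped through the inverse of the mean measure to obtain an inhomogeneous process. The Python sketch below illustrates this under stated assumptions. The intensity [math]\displaystyle{ \lambda(t)=2t }[/math], for which [math]\displaystyle{ \Lambda(0,t)=t^2 }[/math], is a hypothetical example chosen because its inverse is a square root, and all function names are illustrative.

```python
import math
import random

def unit_rate_points(end, rng):
    """Points of a homogeneous Poisson process with rate 1 on (0, end]."""
    points, u = [], rng.expovariate(1.0)
    while u <= end:
        points.append(u)
        u += rng.expovariate(1.0)
    return points

def inverse_mean_measure(u):
    """Inverse of Lambda(0, t) = t**2, the mean measure for lambda(t) = 2t."""
    return math.sqrt(u)

def inhomogeneous_points(horizon, rng):
    """Map unit-rate points on (0, Lambda(0, horizon)] through the inverse."""
    return [inverse_mean_measure(u) for u in unit_rate_points(horizon ** 2, rng)]

rng = random.Random(7)  # fixed seed for reproducibility
samples = [inhomogeneous_points(3.0, rng) for _ in range(2000)]
# Average counts should be near Lambda(0, 3) = 9 and Lambda(0, 1) = 1.
mean_total = sum(len(s) for s in samples) / len(samples)
mean_early = sum(sum(1 for t in s if t <= 1.0) for s in samples) / len(samples)
print(mean_total, mean_early)
```

This approach needs an explicit inverse of the mean measure; when none is available, the inverse would have to be computed numerically or another simulation method used.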

Counting process interpretation

The inhomogeneous Poisson point process, when considered on the positive half-line, is also sometimes defined as a counting process. With this interpretation, the process, which is sometimes written as [math]\displaystyle{ \textstyle \{N(t), t\geq 0\} }[/math], represents the total number of occurrences or events that have happened up to and including time [math]\displaystyle{ \textstyle t }[/math]. A counting process is said to be an inhomogeneous Poisson counting process if it has the four properties:[34][74]

- [math]\displaystyle{ \textstyle N(0)=0; }[/math]

- has independent increments;

- [math]\displaystyle{ \textstyle \Pr\{ N(t+h) - N(t)=1 \} =\lambda(t)h + o(h); }[/math] and

- [math]\displaystyle{ \textstyle \Pr \{ N(t+h) - N(t)\ge 2 \} = o(h), }[/math]

where [math]\displaystyle{ \textstyle o(h) }[/math] is asymptotic or little-o notation for [math]\displaystyle{ \textstyle o(h)/h\rightarrow 0 }[/math] as [math]\displaystyle{ \textstyle h\rightarrow 0 }[/math]. In the case of point processes with refractoriness (e.g., neural spike trains) a stronger version of property 4 applies:[75] [math]\displaystyle{ \Pr \{N(t+h)-N(t) \ge 2\} = o(h^2) }[/math].

The above properties imply that [math]\displaystyle{ \textstyle N(t+h) - N(t) }[/math] is a Poisson random variable with the parameter (or mean)

- [math]\displaystyle{ \operatorname E[N(t+h) - N(t)] = \int_t^{t+h}\lambda (s) \, ds, }[/math]

which implies

- [math]\displaystyle{ \operatorname E[N(h)]=\int_0^h \lambda (s) \, ds. }[/math]

Spatial Poisson process

An inhomogeneous Poisson process defined in the plane [math]\displaystyle{ \textstyle \mathbb{R}^2 }[/math] is called a spatial Poisson process.[17] It is defined with an intensity function, and its intensity measure is obtained by performing a surface integral of its intensity function over some region.[21][76] For example, its intensity function (as a function of Cartesian coordinates [math]\displaystyle{ x }[/math] and [math]\displaystyle{ \textstyle y }[/math]) can be

- [math]\displaystyle{ \lambda(x,y)= e^{-(x^2+y^2)}, }[/math]

so the corresponding intensity measure is given by the surface integral

- [math]\displaystyle{ \Lambda(B)= \int_B e^{-(x^2+y^2)}\,\mathrm dx\,\mathrm dy, }[/math]

where [math]\displaystyle{ B }[/math] is some bounded region in the plane [math]\displaystyle{ \mathbb{R}^2 }[/math].
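As a worked instance of this integral, suppose that [math]\displaystyle{ B }[/math] is the disc of radius [math]\displaystyle{ R }[/math] centred at the origin (a choice made here for illustration). Changing to polar coordinates [math]\displaystyle{ r }[/math] and [math]\displaystyle{ \theta }[/math] gives:

- [math]\displaystyle{ \Lambda(B)= \int_0^{2\pi}\int_0^R e^{-r^2}\, r \,\mathrm dr\,\mathrm d\theta = 2\pi \cdot \frac{1-e^{-R^2}}{2} = \pi\left(1-e^{-R^2}\right). }[/math]

The expected number of points in the disc therefore increases with [math]\displaystyle{ R }[/math] but never exceeds [math]\displaystyle{ \pi }[/math], which is the limit as [math]\displaystyle{ R\rightarrow\infty }[/math].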

In higher dimensions

In the plane, [math]\displaystyle{ \Lambda(B) }[/math] corresponds to a surface integral while in [math]\displaystyle{ \mathbb{R}^d }[/math] the integral becomes a ([math]\displaystyle{ d }[/math]-dimensional) volume integral.

Applications

When the real line is interpreted as time, the inhomogeneous process is used in the fields of counting processes and in queueing theory.[74][77] Examples of phenomena which have been represented by or appear as an inhomogeneous Poisson point process include:

In the plane, the Poisson point process is important in the related disciplines of stochastic geometry[1][35] and spatial statistics.[22][23] The intensity measure of this point process depends on the location in the underlying space, which means it can be used to model phenomena with a density that varies over some region. In other words, the phenomena can be represented as points that have a location-dependent density.[21] This process has been used in various disciplines, and uses include the study of salmon and sea lice in the oceans,[80] forestry,[5] and search problems.[81]

Interpretation of the intensity function

The Poisson intensity function [math]\displaystyle{ \lambda(x) }[/math] has an interpretation, considered intuitive,[21] with the volume element [math]\displaystyle{ \mathrm dx }[/math] in the infinitesimal sense: [math]\displaystyle{ \lambda(x)\,\mathrm dx }[/math] is the infinitesimal probability of a point of a Poisson point process existing in a region of space with volume [math]\displaystyle{ \mathrm dx }[/math] located at [math]\displaystyle{ x }[/math].[21]

For example, given a homogeneous Poisson point process on the real line, the probability of finding a single point of the process in a small interval of width [math]\displaystyle{ \delta }[/math] is approximately [math]\displaystyle{ \lambda \delta }[/math]. In fact, such intuition is how the Poisson point process is sometimes introduced and its distribution derived.[82][43][83]

Simple point process

If a Poisson point process has an intensity measure that is locally finite and diffuse (or non-atomic), then it is a simple point process. For a simple point process, the probability of a point existing at a single point or location in the underlying (state) space is either zero or one. This implies that, with probability one, no two (or more) points of a Poisson point process coincide in location in the underlying space.[84][19][85]

Simulation

Simulating a Poisson point process on a computer is usually done in a bounded region of space, known as a simulation window, and requires two steps: appropriately creating a random number of points and then suitably placing the points in a random manner. Both steps depend on the specific Poisson point process that is being simulated.[86][87]

Step 1: Number of points

The number of points [math]\displaystyle{ N }[/math] in the window, denoted here by [math]\displaystyle{ W }[/math], needs to be simulated, which is done by using a (pseudo)-random number generating function capable of simulating Poisson random variables.

Homogeneous case

For the homogeneous case with the constant [math]\displaystyle{ \lambda }[/math], the mean of the Poisson random variable [math]\displaystyle{ N }[/math] is set to [math]\displaystyle{ \lambda |W| }[/math] where [math]\displaystyle{ |W| }[/math] is the length, area or ([math]\displaystyle{ d }[/math]-dimensional) volume of [math]\displaystyle{ W }[/math].

Inhomogeneous case

For the inhomogeneous case, [math]\displaystyle{ \lambda |W| }[/math] is replaced with the ([math]\displaystyle{ d }[/math]-dimensional) volume integral

- [math]\displaystyle{ \Lambda(W)=\int_W\lambda(x)\,\mathrm dx }[/math]

Step 2: Positioning of points

The second stage requires randomly placing the [math]\displaystyle{ \textstyle N }[/math] points in the window [math]\displaystyle{ \textstyle W }[/math].

Homogeneous case

For the homogeneous case in one dimension, all points are uniformly and independently placed in the window or interval [math]\displaystyle{ \textstyle W }[/math]. For higher dimensions in a Cartesian coordinate system, each coordinate is uniformly and independently placed in the window [math]\displaystyle{ \textstyle W }[/math]. If the window is not a subspace of Cartesian space (for example, inside a unit sphere or on the surface of a unit sphere), then the points will not be uniformly placed in [math]\displaystyle{ \textstyle W }[/math], and a suitable change of coordinates (from Cartesian) is needed.[86]
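The two steps for the homogeneous case can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not a reference implementation: the function names, the intensity 5 and the window sizes are hypothetical. The Poisson number of points is drawn by counting unit-rate exponential arrivals, a simple choice made to keep the example within the standard library. For the disc, the radius is drawn as [math]\displaystyle{ R\sqrt{U} }[/math] with [math]\displaystyle{ U }[/math] uniform on [math]\displaystyle{ [0,1] }[/math], so that the points are uniform with respect to area rather than radius.

```python
import math
import random

def sample_poisson(mean, rng):
    """Poisson variate obtained by counting unit-rate arrivals in (0, mean]."""
    count, t = 0, rng.expovariate(1.0)
    while t <= mean:
        count += 1
        t += rng.expovariate(1.0)
    return count

def homogeneous_on_rectangle(rate, width, height, rng):
    """Step 1: Poisson count with mean rate * area. Step 2: uniform coordinates."""
    n = sample_poisson(rate * width * height, rng)
    return [(rng.uniform(0.0, width), rng.uniform(0.0, height)) for _ in range(n)]

def homogeneous_on_disc(rate, radius, rng):
    """Same two steps on a disc, using a change to polar coordinates."""
    n = sample_poisson(rate * math.pi * radius ** 2, rng)
    points = []
    for _ in range(n):
        r = radius * math.sqrt(rng.random())     # square root gives uniform area
        theta = rng.uniform(0.0, 2.0 * math.pi)
        points.append((r * math.cos(theta), r * math.sin(theta)))
    return points

rng = random.Random(42)  # fixed seed for reproducibility
rect = homogeneous_on_rectangle(5.0, 2.0, 3.0, rng)
disc = homogeneous_on_disc(5.0, 1.0, rng)
print(len(rect), len(disc))
```

Drawing the radius uniformly on [math]\displaystyle{ [0,R] }[/math] instead would cluster points near the centre, which is the non-uniformity in polar coordinates that the change of variable corrects.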

Inhomogeneous case

For the inhomogeneous case, a couple of different methods can be used depending on the nature of the intensity function [math]\displaystyle{ \textstyle \lambda(x) }[/math].[86] If the intensity function is sufficiently simple, then independent and random non-uniform (Cartesian or other) coordinates of the points can be generated. For example, simulating a Poisson point process on a circular window can be done for an isotropic intensity function (in polar coordinates [math]\displaystyle{ \textstyle r }[/math] and [math]\displaystyle{ \textstyle \theta }[/math]), meaning that it is rotationally invariant, that is, independent of [math]\displaystyle{ \textstyle \theta }[/math] but dependent on [math]\displaystyle{ \textstyle r }[/math], by a change of variable in [math]\displaystyle{ \textstyle r }[/math] if the intensity function is sufficiently simple.[86]

For more complicated intensity functions, one can use an acceptance-rejection method, which consists of using (or 'accepting') only certain random points and not using (or 'rejecting') the other points, based on the ratio:[88]

- [math]\displaystyle{ \frac{\lambda(x_i)}{\Lambda(W)}=\frac{\lambda(x_i)}{\int_W\lambda(x)\,\mathrm dx}, }[/math]

where [math]\displaystyle{ \textstyle x_i }[/math] is the point under consideration for acceptance or rejection.
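One common way to realize an acceptance-rejection scheme is thinning. The sketch below uses that variant, which is not necessarily the exact procedure of the cited source: candidate points are generated from a homogeneous process with a dominating rate `lam_max`, and each candidate is accepted with probability [math]\displaystyle{ \textstyle \lambda(x_i)/\lambda_{\max} }[/math]. The bound `lam_max`, the function names and the example intensity are assumptions introduced for illustration.

```python
import random


def sample_poisson(mean, rng):
    """Poisson variate: count unit-rate exponential arrivals falling in [0, mean]."""
    count, total = 0, rng.expovariate(1.0)
    while total <= mean:
        count += 1
        total += rng.expovariate(1.0)
    return count


def simulate_by_thinning(intensity, lam_max, width, height, rng):
    """Inhomogeneous process on [0, width] x [0, height] via thinning.

    Assumes intensity(x, y) <= lam_max everywhere in the window.
    """
    # Candidates: a homogeneous process with the dominating rate lam_max
    n = sample_poisson(lam_max * width * height, rng)
    kept = []
    for _ in range(n):
        x, y = rng.uniform(0.0, width), rng.uniform(0.0, height)
        # Accept the candidate with probability intensity(x, y) / lam_max
        if rng.random() < intensity(x, y) / lam_max:
            kept.append((x, y))
    return kept


def example_intensity(x, y):
    """Hypothetical intensity, linear in x; its integral over the unit square is 50."""
    return 100.0 * x


rng = random.Random(0)
points = simulate_by_thinning(example_intensity, 100.0, 1.0, 1.0, rng)
print(len(points), "points kept; the expected count is 50")
```

A tighter bound `lam_max` wastes fewer candidates, but any valid upper bound on the intensity gives a correct sample.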

General Poisson point process

In measure theory, the Poisson point process can be further generalized to what is sometimes known as the general Poisson point process[21][89] or general Poisson process[76] by using a Radon measure [math]\displaystyle{ \textstyle \Lambda }[/math], which is a locally finite measure. In general, this Radon measure [math]\displaystyle{ \textstyle \Lambda }[/math] can be atomic, which means multiple points of the Poisson point process can exist in the same location of the underlying space. In this situation, the number of points at [math]\displaystyle{ \textstyle x }[/math] is a Poisson random variable with mean [math]\displaystyle{ \textstyle \Lambda(\{x\}) }[/math].[89] Sometimes, however, the opposite is assumed, so that the Radon measure [math]\displaystyle{ \textstyle \Lambda }[/math] is diffuse or non-atomic.[21]

A point process [math]\displaystyle{ \textstyle {N} }[/math] is a general Poisson point process with intensity [math]\displaystyle{ \textstyle \Lambda }[/math] if it has the two following properties:[21]

- the number of points in a bounded Borel set [math]\displaystyle{ \textstyle B }[/math] is a Poisson random variable with mean [math]\displaystyle{ \textstyle \Lambda(B) }[/math]. In other words, denote the total number of points located in [math]\displaystyle{ \textstyle B }[/math] by [math]\displaystyle{ \textstyle {N}(B) }[/math], then the probability of random variable [math]\displaystyle{ \textstyle {N}(B) }[/math] being equal to [math]\displaystyle{ \textstyle n }[/math] is given by:

- [math]\displaystyle{ \Pr \{ N(B)=n\}=\frac{(\Lambda(B))^n}{n!} e^{-\Lambda(B)} }[/math]

- the number of points in [math]\displaystyle{ \textstyle n }[/math] disjoint Borel sets forms [math]\displaystyle{ \textstyle n }[/math] independent random variables.

The Radon measure [math]\displaystyle{ \textstyle \Lambda }[/math] maintains its previous interpretation of being the expected number of points of [math]\displaystyle{ \textstyle {N} }[/math] located in the bounded region [math]\displaystyle{ \textstyle B }[/math], namely

- [math]\displaystyle{ \Lambda (B)= \operatorname E[N(B)] . }[/math]

Furthermore, if [math]\displaystyle{ \textstyle \Lambda }[/math] is absolutely continuous such that it has a density (which is the Radon–Nikodym density or derivative) with respect to the Lebesgue measure, then for all Borel sets [math]\displaystyle{ \textstyle B }[/math] it can be written as:

- [math]\displaystyle{ \Lambda (B)=\int_B \lambda(x)\,\mathrm dx, }[/math]

where the density [math]\displaystyle{ \textstyle \lambda(x) }[/math] is known, among other terms, as the intensity function.

History

Poisson distribution

Despite its name, the Poisson point process was neither discovered nor studied by the French mathematician Siméon Denis Poisson; the name is cited as an example of Stigler's law.[14][15] The name stems from its inherent relation to the Poisson distribution, derived by Poisson as a limiting case of the binomial distribution.[90] The binomial distribution describes the probability of the sum of [math]\displaystyle{ \textstyle n }[/math] Bernoulli trials with probability [math]\displaystyle{ \textstyle p }[/math], often likened to the number of heads (or tails) after [math]\displaystyle{ \textstyle n }[/math] biased flips of a coin with the probability of a head (or tail) occurring being [math]\displaystyle{ \textstyle p }[/math]. For some positive constant [math]\displaystyle{ \textstyle \Lambda\gt 0 }[/math], as [math]\displaystyle{ \textstyle n }[/math] increases towards infinity and [math]\displaystyle{ \textstyle p }[/math] decreases towards zero such that the product [math]\displaystyle{ \textstyle np=\Lambda }[/math] is fixed, the Poisson distribution with mean [math]\displaystyle{ \textstyle \Lambda }[/math] more closely approximates the binomial distribution.[91]

Poisson derived the Poisson distribution, published in 1841, by examining the binomial distribution in the limit of [math]\displaystyle{ \textstyle p }[/math] (to zero) and [math]\displaystyle{ \textstyle n }[/math] (to infinity). It only appears once in all of Poisson's work,[92] and the result was not well known during his time. Over the following years a number of people used the distribution without citing Poisson, including Philipp Ludwig von Seidel and Ernst Abbe.[93] [14] At the end of the 19th century, Ladislaus Bortkiewicz would study the distribution again in a different setting (citing Poisson), using the distribution with real data to study the number of deaths from horse kicks in the Prussian army.[90][94]

Discovery

There are a number of claims for early uses or discoveries of the Poisson point process.[14][15] For example, John Michell in 1767, a decade before Poisson was born, was interested in the probability of a star being within a certain region of another star under the assumption that the stars were "scattered by mere chance", and studied an example consisting of the six brightest stars in the Pleiades, without deriving the Poisson distribution. This work inspired Simon Newcomb to study the problem and to calculate the Poisson distribution as an approximation for the binomial distribution in 1860.[15]

At the beginning of the 20th century the Poisson process (in one dimension) would arise independently in different situations.[14][15] In Sweden in 1903, Filip Lundberg published a thesis containing work, now considered fundamental and pioneering, where he proposed to model insurance claims with a homogeneous Poisson process.[95][96]

In Denmark in 1909 another discovery occurred when A.K. Erlang derived the Poisson distribution when developing a mathematical model for the number of incoming phone calls in a finite time interval. Erlang was not at the time aware of Poisson's earlier work and assumed that the numbers of phone calls arriving in each interval of time were independent of each other. He then found the limiting case, which is effectively recasting the Poisson distribution as a limit of the binomial distribution.[14]

In 1910 Ernest Rutherford and Hans Geiger published experimental results on counting alpha particles. Their experimental work had mathematical contributions from Harry Bateman, who derived Poisson probabilities as a solution to a family of differential equations, though the solution had been derived earlier, resulting in the independent discovery of the Poisson process.[14] After this time there were many studies and applications of the Poisson process, but its early history is complicated, which has been explained by the various applications of the process in numerous fields by biologists, ecologists, engineers and various physical scientists.[14]

Early applications

The years after 1909 led to a number of studies and applications of the Poisson point process; however, its early history is complex, which has been explained by the various applications of the process in numerous fields by biologists, ecologists, engineers and others working in the physical sciences. The early results were published in different languages and in different settings, with no standard terminology and notation used.[14] For example, in 1922 Swedish chemist and Nobel Laureate Theodor Svedberg proposed a model in which a spatial Poisson point process is the underlying process to study how plants are distributed in plant communities.[97] A number of mathematicians started studying the process in the early 1930s, and important contributions were made by Andrey Kolmogorov, William Feller and Aleksandr Khinchin,[14] among others.[98] In the field of teletraffic engineering, mathematicians and statisticians studied and used Poisson and other point processes.[99]

History of terms

The Swede Conny Palm in his 1943 dissertation studied the Poisson and other point processes in the one-dimensional setting by examining them in terms of the statistical or stochastic dependence between the points in time.[100][99] His work contains the first known recorded use of the term point processes, as Punktprozesse in German.[100][15]

It is believed[14] that William Feller was the first in print to refer to it as the Poisson process in a 1940 paper. Although the Swede Ove Lundberg used the term Poisson process in his 1940 PhD dissertation,[15] in which Feller was acknowledged as an influence,[101] it has been claimed that Feller coined the term before 1940.[91] It has been remarked that both Feller and Lundberg used the term as though it were well-known, implying it was already in spoken use by then.[15] Feller worked from 1936 to 1939 alongside Harald Cramér at Stockholm University, where Lundberg was a PhD student under Cramér. Cramér did not use the term Poisson process in a book he finished in 1936, but did in subsequent editions, which has led to the speculation that the term Poisson process was coined sometime between 1936 and 1939 at Stockholm University.[15]

Terminology

The terminology of point process theory in general has been criticized for being too varied.[15] In addition to the word point often being omitted,[65][2] the homogeneous Poisson (point) process is also called a stationary Poisson (point) process,[49] as well as uniform Poisson (point) process.[44] The inhomogeneous Poisson point process, as well as being called nonhomogeneous,[49] is also referred to as the non-stationary Poisson process.[74][102]

The term point process has been criticized, as the word process can suggest evolution over time, so the term random point field has been suggested instead,[103] resulting in the terms Poisson random point field or Poisson point field also being used.[104] A point process is considered, and sometimes called, a random counting measure,[105] hence the Poisson point process is also referred to as a Poisson random measure,[106] a term used in the study of Lévy processes,[106][107] but some choose to use the two terms for Poisson point processes defined on two different underlying spaces.[108]

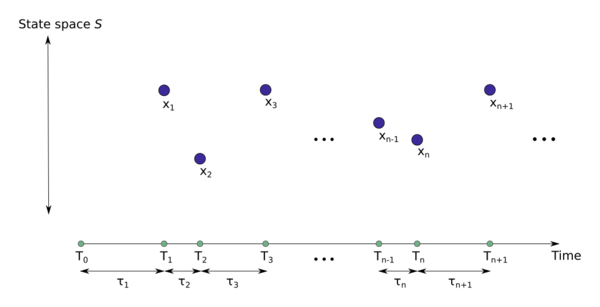

The underlying mathematical space of the Poisson point process is called a carrier space,[109][110] or state space, though the latter term has a different meaning in the context of stochastic processes. In the context of point processes, the term "state space" can mean the space on which the point process is defined such as the real line,[111][112] which corresponds to the index set[113] or parameter set[114] in stochastic process terminology.

The measure [math]\displaystyle{ \textstyle \Lambda }[/math] is called the intensity measure,[115] mean measure,[38] or parameter measure,[69] as there are no standard terms.[38] If [math]\displaystyle{ \textstyle \Lambda }[/math] has a derivative or density, denoted by [math]\displaystyle{ \textstyle \lambda(x) }[/math], then this density is called the intensity function of the Poisson point process.[21] For the homogeneous Poisson point process, the derivative of the intensity measure is simply a constant [math]\displaystyle{ \textstyle \lambda\gt 0 }[/math], which can be referred to as the rate, usually when the underlying space is the real line, or the intensity.[44] It is also called the mean rate or the mean density[116] or rate.[34] For [math]\displaystyle{ \textstyle \lambda=1 }[/math], the corresponding process is sometimes referred to as the standard Poisson (point) process.[45][59][117]

The extent of the Poisson point process is sometimes called the exposure.[118][119]

Notation

The notation of the Poisson point process depends on its setting and the field it is being applied in. For example, on the real line, the Poisson process, whether homogeneous or inhomogeneous, is sometimes interpreted as a counting process, and the notation [math]\displaystyle{ \textstyle \{N(t), t\geq 0\} }[/math] is used to represent the Poisson process.[31][34]

Another reason for varying notation is the theory of point processes, which has a couple of mathematical interpretations. For example, a simple Poisson point process may be considered as a random set, which suggests the notation [math]\displaystyle{ \textstyle x\in N }[/math], implying that [math]\displaystyle{ \textstyle x }[/math] is a random point belonging to or being an element of the Poisson point process [math]\displaystyle{ \textstyle N }[/math]. Another, more general, interpretation is to consider a Poisson or any other point process as a random counting measure, so one can write the number of points of a Poisson point process [math]\displaystyle{ \textstyle {N} }[/math] being found or located in some (Borel measurable) region [math]\displaystyle{ \textstyle B }[/math] as [math]\displaystyle{ \textstyle N(B) }[/math], which is a random variable. These different interpretations result in notation being used from mathematical fields such as measure theory and set theory.[120]

For general point processes, sometimes a subscript on the point symbol, for example [math]\displaystyle{ \textstyle x }[/math], is included so one writes (with set notation) [math]\displaystyle{ \textstyle x_i\in N }[/math] instead of [math]\displaystyle{ \textstyle x\in N }[/math], and [math]\displaystyle{ \textstyle x }[/math] can be used for the bound variable in integral expressions such as Campbell's theorem, instead of denoting random points.[19] Sometimes an uppercase letter denotes the point process, while a lowercase letter denotes a point from the process, so, for example, the point [math]\displaystyle{ \textstyle x }[/math] or [math]\displaystyle{ \textstyle x_i }[/math] belongs to or is a point of the point process [math]\displaystyle{ \textstyle X }[/math], and this can be written with set notation as [math]\displaystyle{ \textstyle x\in X }[/math] or [math]\displaystyle{ \textstyle x_i\in X }[/math].[112]

Furthermore, the set theory and integral or measure theory notation can be used interchangeably. For example, for a point process [math]\displaystyle{ \textstyle N }[/math] defined on the Euclidean state space [math]\displaystyle{ \textstyle {\mathbb{R}^d} }[/math] and a (measurable) function [math]\displaystyle{ \textstyle f }[/math] on [math]\displaystyle{ \textstyle \mathbb{R}^d }[/math], the expression

- [math]\displaystyle{ \int_{\mathbb{R}^d} f(x)\,\mathrm dN(x)=\sum\limits_{x_i\in N} f(x_i), }[/math]

demonstrates two different ways to write a summation over a point process (see also Campbell's theorem (probability)). More specifically, the integral notation on the left-hand side is interpreting the point process as a random counting measure while the sum on the right-hand side suggests a random set interpretation.[120]

Functionals and moment measures

In probability theory, operations are applied to random variables for different purposes. Sometimes these operations are regular expectations that produce the average or variance of a random variable. Others, such as characteristic functions (or Laplace transforms) of a random variable can be used to uniquely identify or characterize random variables and prove results like the central limit theorem.[121] In the theory of point processes there exist analogous mathematical tools which usually exist in the forms of measures and functionals instead of moments and functions respectively.[122][123]

Laplace functionals

For a Poisson point process [math]\displaystyle{ \textstyle N }[/math] with intensity measure [math]\displaystyle{ \textstyle \Lambda }[/math] on some space [math]\displaystyle{ X }[/math], the Laplace functional is given by:[19]

- [math]\displaystyle{ L_N(f)= \mathbb{E} e^{-\int_X f(x)\, N(\mathrm dx)} = e^{-\int_{X}(1-e^{-f(x)})\Lambda(\mathrm dx)}. }[/math]

One version of Campbell's theorem involves the Laplace functional of the Poisson point process.
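
As a brief check of the Laplace functional formula, consider the special case [math]\displaystyle{ \textstyle f(x)=s\,\mathbf{1}_B(x) }[/math], where the constant [math]\displaystyle{ \textstyle s\geq 0 }[/math] and the bounded Borel set [math]\displaystyle{ \textstyle B }[/math] are symbols introduced here purely for illustration. Then [math]\displaystyle{ \textstyle \int_X f(x)\,N(\mathrm dx)=sN(B) }[/math], and the formula reduces to

- [math]\displaystyle{ L_N(f)= \mathbb{E} e^{-sN(B)} = e^{-\Lambda(B)(1-e^{-s})}, }[/math]

which is the Laplace transform of a Poisson random variable with mean [math]\displaystyle{ \textstyle \Lambda(B) }[/math], as expected for the number of points in [math]\displaystyle{ \textstyle B }[/math].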

Probability generating functionals

The probability generating function of a non-negative integer-valued random variable leads to the probability generating functional being defined analogously with respect to any non-negative bounded function [math]\displaystyle{ \textstyle v }[/math] on [math]\displaystyle{ \textstyle \mathbb{R}^d }[/math] such that [math]\displaystyle{ \textstyle 0\leq v(x) \leq 1 }[/math]. For a point process [math]\displaystyle{ \textstyle {N} }[/math] the probability generating functional is defined as:[124]

- [math]\displaystyle{ G(v)=\operatorname E \left[\prod_{x\in N} v(x) \right] }[/math]

where the product is performed for all the points in [math]\displaystyle{ N }[/math]. If the intensity measure [math]\displaystyle{ \textstyle \Lambda }[/math] of [math]\displaystyle{ \textstyle {N} }[/math] is locally finite, then [math]\displaystyle{ G }[/math] is well-defined for any such measurable function [math]\displaystyle{ \textstyle v }[/math] on [math]\displaystyle{ \textstyle \mathbb{R}^d }[/math]. For a Poisson point process with intensity measure [math]\displaystyle{ \textstyle \Lambda }[/math] the generating functional is given by:

- [math]\displaystyle{ G(v)=e^{-\int_{\mathbb{R}^d} [1-v(x)]\,\Lambda(\mathrm dx)}, }[/math]

which in the homogeneous case reduces to

- [math]\displaystyle{ G(v)=e^{-\lambda\int_{\mathbb{R}^d} [1-v(x)]\,\mathrm dx}. }[/math]
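
As a short worked example (with the indicator function chosen here for illustration), take [math]\displaystyle{ \textstyle v(x)=1-\mathbf{1}_B(x) }[/math] for a bounded Borel set [math]\displaystyle{ \textstyle B }[/math]. The product over the points of [math]\displaystyle{ \textstyle N }[/math] then equals one if no point lies in [math]\displaystyle{ \textstyle B }[/math] and zero otherwise, so for a Poisson point process with intensity measure [math]\displaystyle{ \textstyle \Lambda }[/math]

- [math]\displaystyle{ G(v)=\Pr\{N(B)=0\}=e^{-\int_{\mathbb{R}^d} \mathbf{1}_B(x)\,\Lambda(\mathrm dx)}=e^{-\Lambda(B)}, }[/math]

which is the probability that a Poisson random variable with mean [math]\displaystyle{ \textstyle \Lambda(B) }[/math] equals zero.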

Moment measure

For a general Poisson point process with intensity measure [math]\displaystyle{ \textstyle \Lambda }[/math] the first moment measure is its intensity measure:[19][20]

- [math]\displaystyle{ M^1(B)=\Lambda(B), }[/math]

which for a homogeneous Poisson point process with constant intensity [math]\displaystyle{ \textstyle \lambda }[/math] means:

- [math]\displaystyle{ M^1(B)=\lambda|B|, }[/math]

where [math]\displaystyle{ \textstyle |B| }[/math] is the length, area or volume (or more generally, the Lebesgue measure) of [math]\displaystyle{ \textstyle B }[/math].

The Mecke equation

The Mecke equation characterizes the Poisson point process. Let [math]\displaystyle{ \mathbb{N}_\sigma }[/math] be the space of all [math]\displaystyle{ \sigma }[/math]-finite measures on some general space [math]\displaystyle{ \mathcal{Q} }[/math]. A point process [math]\displaystyle{ \eta }[/math] with intensity [math]\displaystyle{ \lambda }[/math] on [math]\displaystyle{ \mathcal{Q} }[/math] is a Poisson point process if and only if for all measurable functions [math]\displaystyle{ f:\mathcal{Q}\times\mathbb{N}_\sigma\to \mathbb{R}_+ }[/math] the following holds

- [math]\displaystyle{ \operatorname E \left[\int f(x,\eta)\eta(\mathrm{d}x)\right]=\int \operatorname E \left[ f(x,\eta+\delta_x) \right] \lambda(\mathrm{d}x) }[/math]

For further details see.[125]

Factorial moment measure

For a general Poisson point process with intensity measure [math]\displaystyle{ \textstyle \Lambda }[/math] the [math]\displaystyle{ \textstyle n }[/math]-th factorial moment measure is given by the expression:[126]

- [math]\displaystyle{ M^{(n)}(B_1\times\cdots\times B_n)=\prod_{i=1}^n[\Lambda(B_i)], }[/math]

where [math]\displaystyle{ \textstyle \Lambda }[/math] is the intensity measure or first moment measure of [math]\displaystyle{ \textstyle {N} }[/math], which for some Borel set [math]\displaystyle{ \textstyle B }[/math] is given by

- [math]\displaystyle{ \Lambda(B)=M^1(B)=\operatorname E[N(B)]. }[/math]

For a homogeneous Poisson point process the [math]\displaystyle{ \textstyle n }[/math]-th factorial moment measure is simply:[19][20]

- [math]\displaystyle{ M^{(n)}(B_1\times\cdots\times B_n)=\lambda^n \prod_{i=1}^n |B_i|, }[/math]

where [math]\displaystyle{ \textstyle |B_i| }[/math] is the length, area, or volume (or more generally, the Lebesgue measure) of [math]\displaystyle{ \textstyle B_i }[/math]. Furthermore, the [math]\displaystyle{ \textstyle n }[/math]-th factorial moment density is:[126]

- [math]\displaystyle{ \mu^{(n)}(x_1,\dots,x_n)=\lambda^n. }[/math]
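
As a worked instance of these formulas, take [math]\displaystyle{ \textstyle n=2 }[/math] and [math]\displaystyle{ \textstyle B_1=B_2=B }[/math] (a choice made here for illustration). The second factorial moment measure counts ordered pairs of distinct points, so

- [math]\displaystyle{ M^{(2)}(B\times B)=\operatorname E[N(B)(N(B)-1)]=\Lambda(B)^2. }[/math]

Combined with [math]\displaystyle{ \textstyle \operatorname E[N(B)]=\Lambda(B) }[/math], this gives

- [math]\displaystyle{ \operatorname{Var}[N(B)]=\Lambda(B)^2+\Lambda(B)-\Lambda(B)^2=\Lambda(B), }[/math]

which agrees with the fact that the mean and variance of a Poisson random variable are equal.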

Avoidance function

The avoidance function [71] or void probability [120] [math]\displaystyle{ \textstyle v }[/math] of a point process [math]\displaystyle{ \textstyle {N} }[/math] is defined in relation to some set [math]\displaystyle{ \textstyle B }[/math], which is a subset of the underlying space [math]\displaystyle{ \textstyle \mathbb{R}^d }[/math], as the probability of no points of [math]\displaystyle{ \textstyle {N} }[/math] existing in [math]\displaystyle{ \textstyle B }[/math]. More precisely,[127] for a test set [math]\displaystyle{ \textstyle B }[/math], the avoidance function is given by:

- [math]\displaystyle{ v(B)=\Pr \{N(B)=0\}. }[/math]

For a general Poisson point process [math]\displaystyle{ \textstyle {N} }[/math] with intensity measure [math]\displaystyle{ \textstyle \Lambda }[/math], its avoidance function is given by:

- [math]\displaystyle{ v(B)=e^{-\Lambda(B)} }[/math]
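
The avoidance function formula can be checked numerically. The following Python sketch is only an illustration under assumed values: a homogeneous process with intensity `lam` on the unit square and a test set [math]\displaystyle{ \textstyle B=[0,0.5]^2 }[/math]. The function names are hypothetical, and the Poisson count is drawn by counting unit-rate exponential arrivals.

```python
import math
import random


def sample_poisson(mean, rng):
    """Poisson variate: count unit-rate exponential arrivals falling in [0, mean]."""
    count, total = 0, rng.expovariate(1.0)
    while total <= mean:
        count += 1
        total += rng.expovariate(1.0)
    return count


def estimate_void_probability(lam, side, trials, rng):
    """Estimate Pr{N(B) = 0} for B = [0, side]^2 inside the unit-square window."""
    empty = 0
    for _ in range(trials):
        n = sample_poisson(lam, rng)  # the window has area 1, so the mean count is lam
        points = [(rng.random(), rng.random()) for _ in range(n)]
        if not any(x <= side and y <= side for x, y in points):
            empty += 1
    return empty / trials


rng = random.Random(0)
lam, side = 2.0, 0.5
estimate = estimate_void_probability(lam, side, 20000, rng)
exact = math.exp(-lam * side * side)  # e^{-Lambda(B)} with Lambda(B) = lam * |B|
print(round(estimate, 3), round(exact, 3))
```

The two printed values should agree up to Monte Carlo error, which shrinks as the number of trials grows.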

Rényi's theorem

Simple point processes are completely characterized by their void probabilities.[128] In other words, complete information of a simple point process is captured entirely in its void probabilities, and two simple point processes have the same void probabilities if and only if they are the same point processes. The case for the Poisson process is sometimes known as Rényi's theorem, which is named after Alfréd Rényi who discovered the result for the case of a homogeneous point process in one dimension.[129]

In one form,[129] Rényi's theorem says that, for a diffuse (or non-atomic) Radon measure [math]\displaystyle{ \textstyle \Lambda }[/math] on [math]\displaystyle{ \textstyle \mathbb{R}^d }[/math] and any set [math]\displaystyle{ \textstyle A }[/math] that is a finite union of rectangles (so not a general Borel set[lower-alpha 4]), if [math]\displaystyle{ \textstyle N }[/math] is a random countable subset of [math]\displaystyle{ \textstyle \mathbb{R}^d }[/math] such that:

- [math]\displaystyle{ \Pr \{N(A)=0\} = v(A) = e^{-\Lambda(A)} }[/math]

then [math]\displaystyle{ \textstyle {N} }[/math] is a Poisson point process with intensity measure [math]\displaystyle{ \textstyle \Lambda }[/math].

Point process operations

Mathematical operations can be performed on point processes to get new point processes and develop new mathematical models for the locations of certain objects. One example of an operation is known as thinning which entails deleting or removing the points of some point process according to a rule, creating a new process with the remaining points (the deleted points also form a point process).[131]

Thinning

For the Poisson process, the independent [math]\displaystyle{ \textstyle p(x) }[/math]-thinning operation results in another Poisson point process. More specifically, a [math]\displaystyle{ \textstyle p(x) }[/math]-thinning operation applied to a Poisson point process with intensity measure [math]\displaystyle{ \textstyle \Lambda }[/math] gives a point process of removed points that is also a Poisson point process [math]\displaystyle{ \textstyle {N}_p }[/math] with intensity measure [math]\displaystyle{ \textstyle \Lambda_p }[/math], which for a bounded Borel set [math]\displaystyle{ \textstyle B }[/math] is given by:

- [math]\displaystyle{ \Lambda_p(B)= \int_B p(x)\,\Lambda(\mathrm dx) }[/math]

This thinning result of the Poisson point process is sometimes known as Prekopa's theorem.[132] Furthermore, after randomly thinning a Poisson point process, the kept or remaining points also form a Poisson point process, which has the intensity measure

- [math]\displaystyle{ \Lambda_{1-p}(B)= \int_B (1-p(x))\,\Lambda(\mathrm dx). }[/math]

The two separate Poisson point processes formed respectively from the removed and kept points are stochastically independent of each other.[131] In other words, if a region is known to contain [math]\displaystyle{ \textstyle n }[/math] kept points (from the original Poisson point process), then this will have no influence on the random number of removed points in the same region. This ability to randomly create two independent Poisson point processes from one is sometimes known as splitting[133][134] the Poisson point process.
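
Splitting can be illustrated with a small simulation. The Python sketch below assumes a homogeneous process with intensity 40 on the interval [0, 1] and a removal probability [math]\displaystyle{ \textstyle p(x)=x }[/math]; these values and the function names are hypothetical choices for the example. Under these assumptions both the removed and the kept counts should have mean 20, and their sample covariance should be close to zero.

```python
import random


def sample_poisson(mean, rng):
    """Poisson variate: count unit-rate exponential arrivals falling in [0, mean]."""
    count, total = 0, rng.expovariate(1.0)
    while total <= mean:
        count += 1
        total += rng.expovariate(1.0)
    return count


def split_process(lam, removal_prob, rng):
    """Split a homogeneous process on [0, 1] into removed and kept points."""
    n = sample_poisson(lam, rng)
    removed, kept = [], []
    for _ in range(n):
        x = rng.random()
        # Each point is removed independently with probability removal_prob(x)
        if rng.random() < removal_prob(x):
            removed.append(x)
        else:
            kept.append(x)
    return removed, kept


rng = random.Random(0)
pairs = [split_process(40.0, lambda x: x, rng) for _ in range(5000)]
r = [len(removed) for removed, _ in pairs]
k = [len(kept) for _, kept in pairs]
mean_r, mean_k = sum(r) / len(r), sum(k) / len(k)
# Sample covariance between removed and kept counts (near zero if independent)
cov = sum((a - mean_r) * (b - mean_k) for a, b in zip(r, k)) / len(r)
print(mean_r, mean_k, cov)
```

A near-zero covariance is only a necessary sign of independence, so this check is indicative rather than a proof.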

Superposition

If there is a countable collection of point processes [math]\displaystyle{ \textstyle N_1,N_2,\dots }[/math], then their superposition, or, in set theory language, their union, which is[135]

- [math]\displaystyle{ N=\bigcup_{i=1}^\infty N_i, }[/math]

also forms a point process. In other words, any points located in any of the point processes [math]\displaystyle{ \textstyle N_1,N_2\dots }[/math] will also be located in the superposition of these point processes [math]\displaystyle{ \textstyle {N} }[/math].

Superposition theorem

The superposition theorem of the Poisson point process says that the superposition of independent Poisson point processes [math]\displaystyle{ \textstyle N_1,N_2\dots }[/math] with mean measures [math]\displaystyle{ \textstyle \Lambda_1,\Lambda_2,\dots }[/math] will also be a Poisson point process with mean measure[136][91]

- [math]\displaystyle{ \Lambda=\sum_{i=1}^\infty \Lambda_i. }[/math]

In other words, the union of two (or countably more) independent Poisson processes is another Poisson process. If a point [math]\displaystyle{ x }[/math] is sampled from a union of [math]\displaystyle{ n }[/math] Poisson processes, then the probability that the point [math]\displaystyle{ \textstyle x }[/math] belongs to the [math]\displaystyle{ j }[/math]th Poisson process [math]\displaystyle{ N_j }[/math] is given by:

- [math]\displaystyle{ \Pr \{x\in N_j\}=\frac{\Lambda_j}{\sum_{i=1}^n\Lambda_i}. }[/math]

For homogeneous Poisson processes with intensities [math]\displaystyle{ \lambda_1,\lambda_2,\dots }[/math], the two previous expressions reduce to

- [math]\displaystyle{ \lambda=\sum_{i=1}^\infty \lambda_i, }[/math]

and

- [math]\displaystyle{ \Pr \{x\in N_j\}=\frac{\lambda_j}{\sum_{i=1}^n \lambda_i}. }[/math]
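
The homogeneous formulas can be illustrated by merging two simulated processes. The Python sketch below assumes two independent homogeneous processes on the interval [0, 1000] with intensities 3 and 1; these values and the function names are hypothetical. The merged process should have about 4 points per unit length, and about three quarters of its points should come from the first process.

```python
import random


def sample_poisson(mean, rng):
    """Poisson variate: count unit-rate exponential arrivals falling in [0, mean]."""
    count, total = 0, rng.expovariate(1.0)
    while total <= mean:
        count += 1
        total += rng.expovariate(1.0)
    return count


def simulate_on_interval(lam, length, rng, label):
    """Homogeneous process on [0, length]; each point carries the label of its source."""
    n = sample_poisson(lam * length, rng)
    return [(rng.uniform(0.0, length), label) for _ in range(n)]


rng = random.Random(0)
lam1, lam2, length = 3.0, 1.0, 1000.0
# Superposition: pool the points of both processes and sort them by position
merged = sorted(
    simulate_on_interval(lam1, length, rng, 1)
    + simulate_on_interval(lam2, length, rng, 2)
)
rate = len(merged) / length
share_1 = sum(1 for _, label in merged if label == 1) / len(merged)
print(rate, share_1)
```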

Clustering

The clustering operation is performed when each point [math]\displaystyle{ \textstyle x }[/math] of some point process [math]\displaystyle{ \textstyle {N} }[/math] is replaced by another (possibly different) point process. If the original process [math]\displaystyle{ \textstyle {N} }[/math] is a Poisson point process, then the resulting process [math]\displaystyle{ \textstyle {N}_c }[/math] is called a Poisson cluster point process.

Random displacement

A mathematical model may require randomly moving points of a point process to other locations on the underlying mathematical space, which gives rise to a point process operation known as displacement [137] or translation.[138] The Poisson point process has been used to model, for example, the movement of plants between generations, owing to the displacement theorem,[137] which loosely says that the random independent displacement of points of a Poisson point process (on the same underlying space) forms another Poisson point process.

Displacement theorem

One version of the displacement theorem[137] involves a Poisson point process [math]\displaystyle{ \textstyle {N} }[/math] on [math]\displaystyle{ \textstyle \mathbb{R}^d }[/math] with intensity function [math]\displaystyle{ \textstyle \lambda(x) }[/math]. It is then assumed the points of [math]\displaystyle{ \textstyle {N} }[/math] are randomly displaced somewhere else in [math]\displaystyle{ \textstyle \mathbb{R}^d }[/math] so that each point's displacement is independent and that the displacement of a point formerly at [math]\displaystyle{ \textstyle x }[/math] is a random vector with a probability density [math]\displaystyle{ \textstyle \rho(x,\cdot) }[/math].[lower-alpha 5] Then the new point process [math]\displaystyle{ \textstyle N_D }[/math] is also a Poisson point process with intensity function

- [math]\displaystyle{ \lambda_D(y)=\int_{\mathbb{R}^d} \lambda(x) \rho(x,y)\,\mathrm dx. }[/math]

If the Poisson process is homogeneous with [math]\displaystyle{ \textstyle\lambda(x) = \lambda \gt 0 }[/math] and if [math]\displaystyle{ \rho(x, y) }[/math] is a function of [math]\displaystyle{ y-x }[/math], then

- [math]\displaystyle{ \lambda_D(y)=\lambda. }[/math]

In other words, after the random and independent displacement of its points, the resulting process is again a homogeneous Poisson point process with the same intensity.
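
This homogeneous case follows directly from the displacement formula. Writing [math]\displaystyle{ \textstyle \rho(x,y)=g(y-x) }[/math] for some probability density [math]\displaystyle{ \textstyle g }[/math] (a symbol introduced here for illustration) and substituting [math]\displaystyle{ \textstyle u=y-x }[/math] gives

- [math]\displaystyle{ \lambda_D(y)=\lambda\int_{\mathbb{R}^d} g(y-x)\,\mathrm dx=\lambda\int_{\mathbb{R}^d} g(u)\,\mathrm du=\lambda, }[/math]

since a probability density integrates to one.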

The displacement theorem can be extended such that the Poisson points are randomly displaced from one Euclidean space [math]\displaystyle{ \textstyle \mathbb{R}^d }[/math] to another Euclidean space [math]\displaystyle{ \textstyle \mathbb{R}^{d'} }[/math], where [math]\displaystyle{ \textstyle d'\geq 1 }[/math] is not necessarily equal to [math]\displaystyle{ \textstyle d }[/math].[19]

Mapping

Another property that is considered useful is the ability to map a Poisson point process from one underlying space to another space.[139]

Mapping theorem

If the mapping (or transformation) adheres to some conditions, then the resulting mapped (or transformed) collection of points also form a Poisson point process, and this result is sometimes referred to as the mapping theorem.[139][140] The theorem involves some Poisson point process with mean measure [math]\displaystyle{ \textstyle \Lambda }[/math] on some underlying space. If the locations of the points are mapped (that is, the point process is transformed) according to some function to another underlying space, then the resulting point process is also a Poisson point process but with a different mean measure [math]\displaystyle{ \textstyle \Lambda' }[/math].

More specifically, one can consider a (Borel measurable) function [math]\displaystyle{ \textstyle f }[/math] that maps a point process [math]\displaystyle{ \textstyle {N} }[/math] with intensity measure [math]\displaystyle{ \textstyle \Lambda }[/math] from one space [math]\displaystyle{ \textstyle S }[/math], to another space [math]\displaystyle{ \textstyle T }[/math] in such a manner so that the new point process [math]\displaystyle{ \textstyle {N}' }[/math] has the intensity measure:

- [math]\displaystyle{ \Lambda'(B)=\Lambda(f^{-1}(B)) }[/math]

with no atoms, where [math]\displaystyle{ \textstyle B }[/math] is a Borel set and [math]\displaystyle{ \textstyle f^{-1}(B) }[/math] denotes the preimage of [math]\displaystyle{ \textstyle B }[/math] under the function [math]\displaystyle{ \textstyle f }[/math]. If [math]\displaystyle{ \textstyle {N} }[/math] is a Poisson point process, then the new process [math]\displaystyle{ \textstyle {N}' }[/math] is also a Poisson point process with the intensity measure [math]\displaystyle{ \textstyle \Lambda' }[/math].
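
The mapping theorem can be illustrated numerically. The Python sketch below assumes a homogeneous process with intensity 20 on [0, 1] and the map [math]\displaystyle{ \textstyle f(x)=x^2 }[/math]; both are hypothetical choices for the example. For the set [0, b], the mapped intensity measure [math]\displaystyle{ \textstyle \Lambda' }[/math] takes the value [math]\displaystyle{ \textstyle \Lambda([0,\sqrt{b}])=20\sqrt{b} }[/math], so the count of mapped points in [0, 0.25] should have mean and variance both close to 10.

```python
import random


def sample_poisson(mean, rng):
    """Poisson variate: count unit-rate exponential arrivals falling in [0, mean]."""
    count, total = 0, rng.expovariate(1.0)
    while total <= mean:
        count += 1
        total += rng.expovariate(1.0)
    return count


def mapped_count(lam, b, rng):
    """Count the points of the mapped process f(x) = x**2 that fall in [0, b]."""
    n = sample_poisson(lam, rng)  # homogeneous process on [0, 1]
    mapped = [rng.random() ** 2 for _ in range(n)]
    return sum(1 for y in mapped if y <= b)


rng = random.Random(0)
lam, b = 20.0, 0.25
counts = [mapped_count(lam, b, rng) for _ in range(5000)]
mean = sum(counts) / len(counts)
var = sum((c - mean) ** 2 for c in counts) / len(counts)
# Mapped intensity measure of [0, b]: Lambda(f^{-1}([0, b])) = lam * sqrt(b)
print(mean, var, lam * b ** 0.5)
```

Matching mean and variance is consistent with a Poisson count but does not by itself establish the full Poisson property.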

Approximations with Poisson point processes

The tractability of the Poisson process means that sometimes it is convenient to approximate a non-Poisson point process with a Poisson one. The overall aim is to approximate both the number of points of some point process and the location of each point by a Poisson point process.[141] There are a number of methods that can be used to justify, informally or rigorously, approximating the occurrence of random events or phenomena with suitable Poisson point processes. The more rigorous methods involve deriving upper bounds on the probability metrics between the Poisson and non-Poisson point processes, while other methods can be justified by less formal heuristics.[142]

Clumping heuristic

One method for approximating random events or phenomena with Poisson processes is called the clumping heuristic.[143] The general heuristic or principle involves using the Poisson point process (or Poisson distribution) to approximate events, which are considered rare or unlikely, of some stochastic process. In some cases these rare events are close to being independent, hence a Poisson point process can be used. When the events are not independent, but tend to occur in clusters or clumps, then if these clumps are suitably defined such that they are approximately independent of each other, then the number of clumps occurring will be close to a Poisson random variable [142] and the locations of the clumps will be close to a Poisson process.[143]

Stein's method

Stein's method is a mathematical technique originally developed for approximating random variables such as Gaussian and Poisson variables, which has also been applied to point processes. Stein's method can be used to derive upper bounds on probability metrics, which give a way to quantify how much two random mathematical objects differ stochastically.[141][144] Upper bounds on probability metrics such as total variation and Wasserstein distance have been derived.[141]

Researchers have applied Stein's method to Poisson point processes in a number of ways,[141] such as using Palm calculus.[110] Techniques based on Stein's method have been developed to factor into the upper bounds the effects of certain point process operations such as thinning and superposition.[145][146] Stein's method has also been used to derive upper bounds on metrics of Poisson and other processes such as the Cox point process, which is a Poisson process with a random intensity measure.[141]
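To make the idea of an upper bound on a probability metric concrete, the sketch below looks at the simplest setting, random variables rather than point processes. It computes the exact total variation distance between a Binomial(n, p) variable and a Poisson variable with the same mean λ = np, and compares it with a well-known bound of Stein–Chen type, min(1, 1/λ)·n·p². This bound is not taken from the sources cited above, and the function names and the values n = 100, p = 0.05 are illustrative assumptions.

```python
import math


def binomial_pmf(n, p, k):
    """P(X = k) for X ~ Binomial(n, p)."""
    return math.comb(n, k) * p**k * (1 - p) ** (n - k)


def poisson_pmf(lam, k):
    """P(Y = k) for Y ~ Poisson(lam), computed on the log scale for stability."""
    return math.exp(-lam + k * math.log(lam) - math.lgamma(k + 1))


def total_variation_binomial_poisson(n, p):
    """Exact total variation distance between Binomial(n, p) and Poisson(n * p)."""
    lam = n * p
    abs_diff = 0.0
    poisson_mass = 0.0
    for k in range(n + 1):
        q = poisson_pmf(lam, k)
        poisson_mass += q
        abs_diff += abs(binomial_pmf(n, p, k) - q)
    # Above n the binomial has no mass, so the whole Poisson tail contributes.
    abs_diff += max(0.0, 1.0 - poisson_mass)
    return 0.5 * abs_diff


def stein_chen_bound(n, p):
    """Upper bound min(1, 1/lam) * n * p**2 on the total variation distance."""
    lam = n * p
    return min(1.0, 1.0 / lam) * n * p * p


n, p = 100, 0.05
tv = total_variation_binomial_poisson(n, p)
bound = stein_chen_bound(n, p)
print(f"total variation distance {tv:.5f} <= bound {bound:.5f}")
```

The exact distance lies well below the bound here, which is typical: bounds of this kind are valued because they hold for all n and p and extend to dependent settings, not because they are tight in a specific case.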

Convergence to a Poisson point process

In general, when an operation is applied to a general point process the resulting process is usually not a Poisson point process. For example, if a point process, other than a Poisson point process, has its points randomly and independently displaced, then the resulting process would not necessarily be a Poisson point process. However, under certain mathematical conditions for both the original point process and the random displacement, it has been shown via limit theorems that if the points of a point process are repeatedly displaced in a random and independent manner, then the finite-dimensional distributions of the point process will converge (weakly) to those of a Poisson point process.[147]

Similar convergence results have been developed for thinning and superposition operations[147] that show that such repeated operations on point processes can, under certain conditions, result in the process converging to a Poisson point process, provided there is a suitable rescaling of the intensity measure (otherwise values of the intensity measure of the resulting point processes would approach zero or infinity). Such convergence work is directly related to the results known as the Palm–Khinchin[lower-alpha 6] equations, which have their origins in the work of Conny Palm and Aleksandr Khinchin,[148] and helps explain why the Poisson process can often be used as a mathematical model of various random phenomena.[147]

Generalizations of Poisson point processes

The Poisson point process can be generalized by, for example, changing its intensity measure or defining it on more general mathematical spaces. These generalizations can be studied mathematically as well as used to model or represent physical phenomena.

Poisson-type random measures

The Poisson-type random measures (PT) are a family of three random counting measures which are closed under restriction to a subspace, i.e. closed under a point process operation. These random measures are examples of the mixed binomial process and share the distributional self-similarity property of the Poisson random measure. They are the only members of the canonical non-negative power series family of distributions to possess this property and include the Poisson distribution, negative binomial distribution, and binomial distribution. The Poisson random measure is independent on disjoint subspaces, whereas the other PT random measures (negative binomial and binomial) have positive and negative covariances. The PT random measures, which are discussed in the literature,[149] comprise the Poisson random measure, negative binomial random measure, and binomial random measure.

Poisson point processes on more general spaces

For mathematical models the Poisson point process is often defined in Euclidean space,[1][38] but has been generalized to more abstract spaces and plays a fundamental role in the study of random measures,[150][151] which requires an understanding of mathematical fields such as probability theory, measure theory and topology.[152]

In general, the concept of distance is of practical interest for applications, while topological structure is needed for Palm distributions, meaning that point processes are usually defined on mathematical spaces with metrics.[153] Furthermore, a realization of a point process can be considered as a counting measure, so point processes are types of random measures known as random counting measures.[117] In this context, the Poisson and other point processes have been studied on a locally compact second countable Hausdorff space.[154]

Cox point process

A Cox point process, Cox process or doubly stochastic Poisson process is a generalization of the Poisson point process obtained by letting its intensity measure [math]\displaystyle{ \textstyle \Lambda }[/math] also be random and independent of the underlying Poisson process. The process is named after David Cox, who introduced it in 1955, though other Poisson processes with random intensities had been independently introduced earlier by Lucien Le Cam and Maurice Quenouille.[15] The intensity measure may be a realization of a random variable or a random field. For example, if the logarithm of the intensity measure is a Gaussian random field, then the resulting process is known as a log Gaussian Cox process.[155] More generally, the intensity measure is a realization of a non-negative locally finite random measure. Cox point processes exhibit a clustering of points, which can be shown mathematically to be larger than that of Poisson point processes. The generality and tractability of Cox processes have resulted in them being used as models in fields such as spatial statistics[156] and wireless networks.[20]
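The clustering effect can be seen in a small simulation. The Python sketch below compares counts on [0, 1] from a homogeneous Poisson process with fixed rate 10 against counts from a very simple Cox process whose rate is a single gamma-distributed random variable with mean 10, drawn afresh for each realization. The gamma distribution, the parameter values and the function names are assumptions chosen for illustration; a random field intensity such as the log Gaussian case would need more machinery.

```python
import random


def poisson_count(rate, length, rng):
    """Number of points on [0, length] of a homogeneous Poisson process with the given rate."""
    count = 0
    t = rng.expovariate(rate)
    while t <= length:
        count += 1
        t += rng.expovariate(rate)
    return count


def mean_and_variance(values):
    """Sample mean and unbiased sample variance."""
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / (len(values) - 1)
    return mean, var


rng = random.Random(1)  # fixed seed so the sketch is reproducible
n_runs = 20000

# Poisson process: deterministic rate 10.
poisson_counts = [poisson_count(10.0, 1.0, rng) for _ in range(n_runs)]

# Cox process: the rate itself is random, Gamma(shape=2, scale=5), so its mean is 10
# and its variance is 50; given the rate, the points form a Poisson process.
cox_counts = [poisson_count(rng.gammavariate(2.0, 5.0), 1.0, rng) for _ in range(n_runs)]

poisson_mean, poisson_var = mean_and_variance(poisson_counts)
cox_mean, cox_var = mean_and_variance(cox_counts)

print(f"Poisson: mean {poisson_mean:.2f}, variance {poisson_var:.2f}")
print(f"Cox:     mean {cox_mean:.2f}, variance {cox_var:.2f}")
```

Both processes have the same mean count, but the variance of the Cox counts is the mean plus the variance of the random rate, so the counts are overdispersed relative to the Poisson case.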

Marked Poisson point process

Each random point of a given point process can have a random mathematical object, known as a mark, assigned to it. These marks can be as diverse as integers, real numbers, lines, geometrical objects or other point processes.[157][158] The pair consisting of a point of the point process and its corresponding mark is called a marked point, and all the marked points form a marked point process.[159] It is often assumed that the random marks are independent of each other and identically distributed, yet the mark of a point can still depend on the location of its corresponding point in the underlying (state) space.[160] If the underlying point process is a Poisson point process, then the resulting point process is a marked Poisson point process.[161]

Marking theorem

If a general point process is defined on some mathematical space and the random marks are defined on another mathematical space, then the marked point process is defined on the Cartesian product of these two spaces. For a marked Poisson point process with independent and identically distributed marks, the marking theorem[160][162] states that this marked point process is also a (non-marked) Poisson point process defined on the aforementioned Cartesian product of the two mathematical spaces, which is not true for general point processes.
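A simple numerical check of the marking theorem can be sketched as follows. A homogeneous Poisson process with rate 5 on [0, 2] is given independent Uniform(0, 1) marks, and the marked points falling in the rectangle [0, 1.5] × [0, 0.4] of the product space are counted. If the marked process is a Poisson point process on the product space, this count should have mean and variance both equal to 5 · 1.5 · 0.4 = 3. The uniform marks, the rectangle and the function names are illustrative assumptions.

```python
import random


def marked_poisson(rate, t_max, rng):
    """Points of a homogeneous Poisson process on [0, t_max], each paired with an
    independent Uniform(0, 1) mark."""
    marked_points = []
    t = rng.expovariate(rate)
    while t <= t_max:
        marked_points.append((t, rng.random()))
        t += rng.expovariate(rate)
    return marked_points


def count_in_rectangle(marked_points, a, c):
    """Number of marked points (x, m) with location x <= a and mark m <= c."""
    return sum(1 for x, m in marked_points if x <= a and m <= c)


rng = random.Random(2)  # fixed seed so the sketch is reproducible
rate, t_max, a, c = 5.0, 2.0, 1.5, 0.4

counts = [count_in_rectangle(marked_poisson(rate, t_max, rng), a, c) for _ in range(20000)]
sample_mean = sum(counts) / len(counts)
sample_var = sum((n - sample_mean) ** 2 for n in counts) / (len(counts) - 1)

# Intensity measure of the rectangle in the product space: rate * a * c
expected = rate * a * c

print(f"sample mean {sample_mean:.3f}, sample variance {sample_var:.3f}, expected {expected:.3f}")
```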

Compound Poisson point process

The compound Poisson point process or compound Poisson process is formed by adding random values or weights to each point of a Poisson point process defined on some underlying space, so the process is constructed from a marked Poisson point process, where the marks form a collection of independent and identically distributed non-negative random variables. In other words, for each point of the original Poisson process, there is an independent and identically distributed non-negative random variable, and then the compound Poisson process is formed from the sum of all the random variables corresponding to points of the Poisson process located in some region of the underlying mathematical space.[163]

If there is a marked Poisson point process formed from a Poisson point process [math]\displaystyle{ \textstyle N }[/math] (defined on, for example, [math]\displaystyle{ \textstyle \mathbb{R}^d }[/math]) and a collection of independent and identically distributed non-negative marks [math]\displaystyle{ \textstyle \{M_i\} }[/math] such that for each point [math]\displaystyle{ \textstyle x_i }[/math] of the Poisson process [math]\displaystyle{ \textstyle N }[/math] there is a non-negative random variable [math]\displaystyle{ \textstyle M_i }[/math], the resulting compound Poisson process is then:[164]

- [math]\displaystyle{ C(B)=\sum_{i=1}^{N(B)} M_i , }[/math]

where [math]\displaystyle{ \textstyle B\subset \mathbb{R}^d }[/math] is a Borel measurable set.
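The sum above can be simulated directly. The sketch below takes N to be a homogeneous Poisson process with rate 3 on the line, B = [0, 2], and exponentially distributed marks with mean 0.5, all of which are assumptions chosen for illustration. It compares the sample mean and variance of C(B) with the standard identities E[C(B)] = Λ(B)·E[M] and Var[C(B)] = Λ(B)·E[M²], where Λ(B) = 3 · 2 = 6 is the expected number of points in B.

```python
import random


def compound_poisson_sum(rate, t_max, mark_mean, rng):
    """C(B) for B = [0, t_max]: the sum of i.i.d. exponential marks, one for each
    point of a homogeneous Poisson process with the given rate that falls in B."""
    total = 0.0
    t = rng.expovariate(rate)
    while t <= t_max:
        total += rng.expovariate(1.0 / mark_mean)  # mark M_i with mean mark_mean
        t += rng.expovariate(rate)
    return total


rng = random.Random(3)  # fixed seed so the sketch is reproducible
rate, t_max, mark_mean = 3.0, 2.0, 0.5

samples = [compound_poisson_sum(rate, t_max, mark_mean, rng) for _ in range(20000)]
sample_mean = sum(samples) / len(samples)
sample_var = sum((s - sample_mean) ** 2 for s in samples) / (len(samples) - 1)

# For exponential marks, E[M] = mark_mean and E[M^2] = 2 * mark_mean**2.
expected_mean = rate * t_max * mark_mean
expected_var = rate * t_max * 2 * mark_mean**2

print(f"mean {sample_mean:.3f} (expected {expected_mean:.3f})")
print(f"variance {sample_var:.3f} (expected {expected_var:.3f})")
```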

If general random variables [math]\displaystyle{ \textstyle \{M_i\} }[/math] take values in, for example, [math]\displaystyle{ \textstyle d }[/math]-dimensional Euclidean space [math]\displaystyle{ \textstyle \mathbb{R}^d }[/math], the resulting compound Poisson process is an example of a Lévy process provided that it is formed from a homogeneous Poisson point process [math]\displaystyle{ \textstyle N }[/math] defined on the non-negative numbers [math]\displaystyle{ \textstyle [0, \infty) }[/math].[165]

Failure process with the exponential smoothing of intensity functions

The failure process with the exponential smoothing of intensity functions (FP-ESI) is an extension of the nonhomogeneous Poisson process. The intensity function of an FP-ESI is an exponential smoothing function of the intensity functions at the last time points of event occurrences. When fitted to eight real-world failure datasets, the FP-ESI outperforms nine other stochastic processes,[166] with model performance measured in terms of the Akaike information criterion (AIC) and the Bayesian information criterion (BIC).

See also

- Boolean model (probability theory)

- Continuum percolation theory

- Compound Poisson process

- Cox process

- Point process

- Stochastic geometry

- Stochastic geometry models of wireless networks

- Markovian arrival processes

Notes

- ↑ See Section 2.3.2 of Chiu, Stoyan, Kendall, Mecke[1] or Section 1.3 of Kingman.[2]

- ↑ For example, it is possible for an event not happening in the queueing theory sense to be an event in the probability theory sense.

- ↑ Instead of [math]\displaystyle{ \textstyle \lambda(x) }[/math] and [math]\displaystyle{ \textstyle{\mathrm d}x }[/math], one could write, for example, in (two-dimensional) polar coordinates [math]\displaystyle{ \textstyle \lambda(r,\theta) }[/math] and [math]\displaystyle{ r\,dr\,d\theta }[/math] , where [math]\displaystyle{ \textstyle r }[/math] and [math]\displaystyle{ \textstyle \theta }[/math] denote the radial and angular coordinates respectively, and so [math]\displaystyle{ \textstyle{\mathrm d}x }[/math] would be an area element in this example.

- ↑ This set [math]\displaystyle{ \textstyle A }[/math] is formed by a finite number of unions, whereas a Borel set is formed by a countable number of set operations.[130]

- ↑ Kingman[137] calls this a probability density, but in other resources this is called a probability kernel.[19]

- ↑ Also spelt Palm–Khintchine in, for example, Point Processes by Cox and Isham.

References

Specific

- ↑ 1.0 1.1 1.2 1.3 1.4 1.5 Sung Nok Chiu; Dietrich Stoyan; Wilfrid S. Kendall; Joseph Mecke (27 June 2013). Stochastic Geometry and Its Applications. John Wiley & Sons. ISBN 978-1-118-65825-3. https://books.google.com/books?id=825NfM6Nc-EC.

- ↑ 2.0 2.1 2.2 2.3 2.4 J. F. C. Kingman (17 December 1992). Poisson Processes. Clarendon Press. ISBN 978-0-19-159124-2. https://books.google.com/books?id=VEiM-OtwDHkC.

- ↑ G. J. Babu and E. D. Feigelson. Spatial point processes in astronomy. Journal of statistical planning and inference, 50(3):311–326, 1996.

- ↑ H. G. Othmer, S. R. Dunbar, and W. Alt. Models of dispersal in biological systems. Journal of mathematical biology, 26(3):263–298, 1988.

- ↑ 5.0 5.1 H. Thompson. Spatial point processes, with applications to ecology. Biometrika, 42(1/2):102–115, 1955.

- ↑ C. B. Connor and B. E. Hill. Three nonhomogeneous Poisson models for the probability of basaltic volcanism: application to the Yucca Mountain region, Nevada. Journal of Geophysical Research: Solid Earth (1978–2012), 100(B6):10107–10125, 1995.

- ↑ Gardner, J. K.; Knopoff, L. (1974). "Is the sequence of earthquakes in Southern California, with aftershocks removed, Poissonian?". Bulletin of the Seismological Society of America 64 (5): 1363–1367. doi:10.1785/BSSA0640051363. Bibcode: 1974BuSSA..64.1363G. https://pubs.geoscienceworld.org/ssa/bssa/article-abstract/64/5/1363/117341/is-the-sequence-of-earthquakes-in-southern.

- ↑ J. D. Scargle. Studies in astronomical time series analysis. V. Bayesian blocks, a new method to analyze structure in photon counting data. The Astrophysical Journal, 504(1):405, 1998.

- ↑ P. Aghion and P. Howitt. A Model of Growth through Creative Destruction. Econometrica, 60(2):323–351, 1992.

- ↑ M. Bertero, P. Boccacci, G. Desidera, and G. Vicidomini. Image deblurring with Poisson data: from cells to galaxies. Inverse Problems, 25(12):123006, 2009.

- ↑ "The Color of Noise". https://caseymuratori.com/blog_0010.

- ↑ 12.0 12.1 F. Baccelli and B. Błaszczyszyn. Stochastic Geometry and Wireless Networks, Volume II- Applications, volume 4, No 1–2 of Foundations and Trends in Networking. NoW Publishers, 2009.

- ↑ M. Haenggi, J. Andrews, F. Baccelli, O. Dousse, and M. Franceschetti. Stochastic geometry and random graphs for the analysis and design of wireless networks. IEEE JSAC, 27(7):1029–1046, September 2009.

- ↑ 14.00 14.01 14.02 14.03 14.04 14.05 14.06 14.07 14.08 14.09 14.10 Stirzaker, David (2000). "Advice to Hedgehogs, or, Constants Can Vary". The Mathematical Gazette 84 (500): 197–210. doi:10.2307/3621649. ISSN 0025-5572.