Mirror descent

In mathematics, mirror descent is an iterative optimization algorithm for finding a local minimum of a differentiable function.

It generalizes algorithms such as gradient descent and multiplicative weights.

History

Mirror descent was originally proposed by Nemirovski and Yudin in 1983.[1]

Motivation

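In gradient descent with the sequence of learning rates $(\eta_n)_{n \ge 0}$ applied to a differentiable function $F$, one starts with a guess $\mathbf{x}_0$ for a local minimum of $F$ and considers the sequence $\mathbf{x}_0, \mathbf{x}_1, \mathbf{x}_2, \ldots$ such that

$$\mathbf{x}_{n+1} = \mathbf{x}_n - \eta_n \nabla F(\mathbf{x}_n), \quad n \ge 0.$$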

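This can be reformulated by noting that

$$\mathbf{x}_{n+1} = \arg\min_{\mathbf{x}} \left( F(\mathbf{x}_n) + \nabla F(\mathbf{x}_n)^{\mathsf{T}} (\mathbf{x} - \mathbf{x}_n) + \frac{1}{2\eta_n} \|\mathbf{x} - \mathbf{x}_n\|^2 \right).$$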

In other words, $\mathbf{x}_{n+1}$ minimizes the first-order approximation to $F$ at $\mathbf{x}_n$ with added proximity term $\frac{1}{2\eta_n} \|\mathbf{x} - \mathbf{x}_n\|^2$.
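The reformulation can be checked directly. Writing $\phi_n(\mathbf{x})$ (notation introduced here) for the first-order approximation plus the proximity term, $\phi_n$ is strictly convex for $\eta_n > 0$, so its unique minimizer is the point where its gradient vanishes, and that point is exactly the gradient descent update:

```latex
\begin{aligned}
\phi_n(\mathbf{x}) &= F(\mathbf{x}_n) + \nabla F(\mathbf{x}_n)^{\mathsf{T}}(\mathbf{x} - \mathbf{x}_n)
                     + \frac{1}{2\eta_n}\|\mathbf{x} - \mathbf{x}_n\|^2, \\
\nabla \phi_n(\mathbf{x}) &= \nabla F(\mathbf{x}_n) + \frac{1}{\eta_n}(\mathbf{x} - \mathbf{x}_n) = 0
  \quad\Longleftrightarrow\quad
  \mathbf{x} = \mathbf{x}_n - \eta_n \nabla F(\mathbf{x}_n).
\end{aligned}
```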

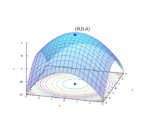

This squared Euclidean distance term is a particular example of a Bregman distance. Using other Bregman distances yields other algorithms, such as Hedge, which may be better suited to optimization over particular geometries.[2][3]
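A minimal Python sketch of a generic Bregman divergence $D_h(x \parallel y) = h(x) - h(y) - \langle \nabla h(y), x - y \rangle$, instantiated with two generating functions. With $h(x) = \tfrac12\|x\|^2$ it gives $\tfrac12\|x - y\|^2$, which is the proximity term above up to the factor $1/\eta_n$; with the negative entropy $h(x) = \sum_i x_i \log x_i$ it gives the generalized Kullback-Leibler divergence, which reduces to the usual KL divergence on the probability simplex and is the geometry behind Hedge. All function names are illustrative, not taken from any library.

```python
import math
from typing import Callable, List, Sequence

Vector = Sequence[float]


def bregman(h: Callable[[Vector], float],
            grad_h: Callable[[Vector], List[float]],
            x: Vector, y: Vector) -> float:
    """Bregman divergence D_h(x || y) = h(x) - h(y) - <grad h(y), x - y>."""
    g = grad_h(y)
    return h(x) - h(y) - sum(gi * (xi - yi) for gi, xi, yi in zip(g, x, y))


# h(x) = 1/2 ||x||^2: the Bregman divergence is 1/2 ||x - y||^2.
def half_sq_norm(x: Vector) -> float:
    return 0.5 * sum(xi * xi for xi in x)


def grad_half_sq_norm(x: Vector) -> List[float]:
    return list(x)  # the gradient of 1/2 ||x||^2 is x itself


# Negative entropy h(x) = sum_i x_i log x_i (all x_i > 0): the Bregman
# divergence is the generalized Kullback-Leibler divergence.
def neg_entropy(x: Vector) -> float:
    return sum(xi * math.log(xi) for xi in x)


def grad_neg_entropy(x: Vector) -> List[float]:
    return [1.0 + math.log(xi) for xi in x]


def generalized_kl(x: Vector, y: Vector) -> float:
    """Closed form sum_i x_i log(x_i / y_i) - x_i + y_i, for comparison."""
    return sum(xi * math.log(xi / yi) - xi + yi for xi, yi in zip(x, y))


x, y = [0.2, 0.3, 0.5], [0.4, 0.4, 0.2]
print(bregman(half_sq_norm, grad_half_sq_norm, x, y))  # equals 0.5 * ||x - y||^2
print(bregman(neg_entropy, grad_neg_entropy, x, y), generalized_kl(x, y))
```

Keeping $h$ and $\nabla h$ as separate arguments mirrors the formulation below, where the gradient of the distance-generating function plays its own role as the mirror map.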

Formulation

We are given a convex function $f$ to optimize over a convex set $K \subset \mathbb{R}^n$, and some norm $\|\cdot\|$ on $\mathbb{R}^n$.

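We are also given a differentiable convex function $h \colon \mathbb{R}^n \to \mathbb{R}$ that is $\alpha$-strongly convex with respect to the given norm. This is called the distance-generating function, and its gradient $\nabla h \colon \mathbb{R}^n \to \mathbb{R}^n$ is known as the mirror map.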
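Two common choices of distance-generating function $h$ can be written down with their mirror maps and inverses. The sketch below assumes the Euclidean choice $h(x) = \tfrac12\|x\|_2^2$ and the negative entropy $h(x) = \sum_i x_i \log x_i$ on the positive orthant; the helper names are hypothetical. The round trip at the end checks that the inverse map undoes the mirror map.

```python
import math
from typing import List

# Hypothetical helper names: two common distance-generating functions h,
# each with its mirror map grad h and the inverse map (grad h)^{-1}.


def mirror_euclidean(x: List[float]) -> List[float]:
    # h(x) = 1/2 ||x||_2^2 is 1-strongly convex w.r.t. the Euclidean norm,
    # and its mirror map is the identity.
    return list(x)


def inverse_mirror_euclidean(theta: List[float]) -> List[float]:
    return list(theta)


def mirror_entropy(x: List[float]) -> List[float]:
    # h(x) = sum_i x_i log x_i on the positive orthant: grad h(x)_i = 1 + log x_i.
    # Restricted to the probability simplex, h is 1-strongly convex
    # w.r.t. the l1 norm (a consequence of Pinsker's inequality).
    return [1.0 + math.log(xi) for xi in x]


def inverse_mirror_entropy(theta: List[float]) -> List[float]:
    # Solving 1 + log x_i = theta_i gives x_i = exp(theta_i - 1).
    return [math.exp(ti - 1.0) for ti in theta]


x = [0.1, 0.6, 0.3]
print(inverse_mirror_entropy(mirror_entropy(x)))  # recovers x up to rounding
```

Note that the entropic mirror map sends the positive orthant onto all of $\mathbb{R}^n$, so a gradient step taken in the dual space can never leave the domain of $(\nabla h)^{-1}$.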

Starting from an initial point $x_0 \in K$, each iteration $t = 0, 1, 2, \ldots$ of mirror descent performs the following steps:

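- Map to the dual space: $\theta_t \leftarrow \nabla h(x_t)$.

- Update in the dual space using a gradient step with step size $\eta_t > 0$: $\theta_{t+1} \leftarrow \theta_t - \eta_t \nabla f(x_t)$.

- Map back to the primal space: $x'_{t+1} \leftarrow (\nabla h)^{-1}(\theta_{t+1})$.

- Project back to the feasible region $K$: $x_{t+1} \leftarrow \arg\min_{x \in K} D_h(x \parallel x'_{t+1})$, where $D_h(x \parallel y) = h(x) - h(y) - \langle \nabla h(y), x - y \rangle$ is the Bregman divergence.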
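The four steps can be sketched generically in Python, with the mirror map, its inverse and the projection passed in as functions. This is a minimal sketch under stated assumptions, and every name in it is hypothetical. Two instances illustrate the generalization claimed in the introduction: the negative entropy on the probability simplex, where the Bregman (KL) projection of a positive vector is simply normalization, gives a multiplicative-weights (exponentiated gradient) update, while $h(x) = \tfrac12\|x\|^2$ with $K = \mathbb{R}^n$ gives plain gradient descent.

```python
import math
from typing import Callable, List

Vec = List[float]
Map = Callable[[Vec], Vec]


def mirror_descent(grad_f: Map, mirror: Map, inverse_mirror: Map,
                   project: Map, x0: Vec, step_sizes: List[float]) -> Vec:
    """Run one mirror descent iteration per step size eta_t in step_sizes."""
    x = list(x0)
    for eta in step_sizes:
        theta = mirror(x)                                    # 1. map to the dual space
        g = grad_f(x)
        theta = [ti - eta * gi for ti, gi in zip(theta, g)]  # 2. gradient step in the dual
        x_prime = inverse_mirror(theta)                      # 3. map back to the primal
        x = project(x_prime)                                 # 4. Bregman projection onto K
    return x


def normalize(y: Vec) -> Vec:
    # KL (Bregman) projection of a positive vector onto the probability simplex.
    total = sum(y)
    return [yi / total for yi in y]


def identity(v: Vec) -> Vec:
    return list(v)


def entropic_mirror_descent(grad_f: Map, x0: Vec, step_sizes: List[float]) -> Vec:
    # h = negative entropy on the simplex: a multiplicative-weights update.
    return mirror_descent(
        grad_f,
        mirror=lambda x: [1.0 + math.log(xi) for xi in x],
        inverse_mirror=lambda th: [math.exp(t - 1.0) for t in th],
        project=normalize, x0=x0, step_sizes=step_sizes)


def euclidean_mirror_descent(grad_f: Map, x0: Vec, step_sizes: List[float]) -> Vec:
    # h = 1/2 ||x||^2 with K = R^n: identity maps and no projection,
    # so every iteration is an ordinary gradient descent step.
    return mirror_descent(grad_f, identity, identity, identity, x0, step_sizes)


# Minimize the linear function f(x) = <c, x> over the simplex.
c = [3.0, 1.0, 2.0]
x = entropic_mirror_descent(lambda _x: c, [1 / 3] * 3, [0.1] * 100)
print(x)  # almost all mass on index 1, the smallest entry of c
```

In the example the iterate concentrates on the coordinate with the smallest cost $c_i$, which is the minimizer of a linear function over the simplex; the entropic instance never needs an explicit projection step beyond renormalizing.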

Connections with other methods and extensions

Mirror descent is related to the natural gradient method of information geometry and to Riemannian gradient descent.[4]

Mirror descent in the online optimization setting is known as Online Mirror Descent (OMD).[5]
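In the online setting the loss of each round is revealed only after the learner has committed to a point, and performance is measured by regret against the best fixed choice in hindsight. A minimal sketch, assuming linear losses over the probability simplex and the negative-entropy distance-generating function, under which the online mirror descent step becomes the Hedge update; the function name `hedge_omd` and the toy loss sequence are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def hedge_omd(losses: Sequence[Sequence[float]], eta: float) -> Tuple[float, float]:
    """Entropic online mirror descent on the simplex (the Hedge update).

    Returns the learner's cumulative expected loss and its regret against
    the best single expert in hindsight.
    """
    n = len(losses[0])
    x: List[float] = [1.0 / n] * n       # start from the uniform distribution
    learner_loss = 0.0
    expert_totals = [0.0] * n
    for loss in losses:                  # loss vector revealed after x is chosen
        learner_loss += sum(xi * li for xi, li in zip(x, loss))
        expert_totals = [ti + li for ti, li in zip(expert_totals, loss)]
        # The gradient of the linear loss <loss, x> is the loss vector, so the
        # entropic mirror step followed by the KL projection reduces to a
        # multiplicative update and a renormalization.
        w = [xi * math.exp(-eta * li) for xi, li in zip(x, loss)]
        total = sum(w)
        x = [wi / total for wi in w]
    return learner_loss, learner_loss - min(expert_totals)


# Two experts: expert 0 never incurs loss, expert 1 always does.
total_loss, regret = hedge_omd([[0.0, 1.0]] * 200, eta=0.5)
print(total_loss, regret)
```

Here the learner quickly shifts its weight to the lossless expert, so its regret stays small even though 200 rounds are played.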

See also

- Gradient descent

- Multiplicative weight update method

- Hedge algorithm

- Bregman divergence

References

- [1] Nemirovsky, Arkadi; Yudin, David (1983). Problem Complexity and Method Efficiency in Optimization. John Wiley & Sons.

- [2] Nemirovski, Arkadi (2012). "Tutorial: mirror descent algorithms for large-scale deterministic and stochastic convex optimization". https://www2.isye.gatech.edu/~nemirovs/COLT2012Tut.pdf

- [3] "Mirror descent algorithm". https://tlienart.github.io/posts/2018/10/27-mirror-descent-algorithm/

- [4] Nielsen, Frank. "A note on the natural gradient and its connections with the Riemannian gradient, the mirror descent, and the ordinary gradient". Blog. https://franknielsen.github.io/blog/NaturalGradientConnections/NaturalGradientConnections.pdf

- [5] Fang, Huang; Harvey, Nicholas J. A.; Portella, Victor S.; Friedlander, Michael P. (2021-09-03). "Online mirror descent and dual averaging: keeping pace in the dynamic case". arXiv:2006.02585 [cs.LG].
