Working–Hotelling procedure


In statistics, particularly regression analysis, the Working–Hotelling procedure, named after Holbrook Working and Harold Hotelling, is a method of simultaneous estimation in linear regression models. One of the first developments in simultaneous inference, it was devised by Working and Hotelling for the simple linear regression model in 1929.[1] It provides a confidence region for multiple mean responses; that is, it gives simultaneous upper and lower bounds for the mean of the dependent variable at several levels of the independent variables, at a given confidence level. The resulting confidence bands are known as the Working–Hotelling–Scheffé confidence bands.

Like the closely related Scheffé's method in the analysis of variance, which considers all possible contrasts, the Working–Hotelling procedure considers all possible values of the independent variables; that is, in a particular regression model, the probability that all the Working–Hotelling confidence intervals cover the true value of the mean response is the confidence coefficient. As such, when only a small subset of the possible values of the independent variable is considered, it is more conservative and yields wider intervals than competitors like the Bonferroni correction at the same level of confidence. It outperforms the Bonferroni correction as more values are considered.

Statement

Simple linear regression

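Consider a simple linear regression model $Y = \beta_0 + \beta_1 X + \varepsilon$, where $Y$ is the response variable and $X$ the explanatory variable, and let $b_0$ and $b_1$ be the least-squares estimates of $\beta_0$ and $\beta_1$ respectively. Then the least-squares estimate of the mean response $E(Y_i)$ at the level $X = x_i$ is $\hat{Y}_i = b_0 + b_1 x_i$. It can then be shown, assuming that the errors are independent and identically normally distributed, that a $1 - \alpha$ confidence interval for the mean response at a given level of $X$ is as follows:

$$E(Y_i) \in \left[ b_0 + b_1 x_i \pm t_{\alpha/2;\,n-2} \sqrt{MSE \left( \frac{1}{n} + \frac{(x_i - \bar{x})^2}{\sum_{j=1}^{n} (x_j - \bar{x})^2} \right)} \right]$$

where $MSE = \frac{1}{n-2} \sum_{j=1}^{n} e_j^2$ is the mean squared error (with $e_j$ the $j$-th residual) and $t_{\alpha/2;\,n-2}$ denotes the upper $\alpha/2$ percentile of Student's t-distribution with $n-2$ degrees of freedom.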

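However, as multiple mean responses are estimated, the joint confidence level declines rapidly. To fix the confidence coefficient at $1 - \alpha$, the Working–Hotelling approach employs an F-statistic:[2][3]

$$E(Y_i) \in \left[ b_0 + b_1 x_i \pm W \sqrt{MSE \left( \frac{1}{n} + \frac{(x_i - \bar{x})^2}{\sum_{j=1}^{n} (x_j - \bar{x})^2} \right)} \right]$$

where $W^2 = 2F_{\alpha;\,2,\,n-2}$ and $F_{\alpha;\,2,\,n-2}$ denotes the upper $\alpha$ percentile of the F-distribution with $(2, n-2)$ degrees of freedom. The confidence level of $1 - \alpha$ holds over all values of $X$, i.e. $x_i \in \mathbb{R}$.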
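As a rough illustration of how much wider the simultaneous band is than the pointwise interval, the multipliers can be worked out by hand. The values $n = 15$ and $\alpha = 0.05$ are assumed here because they match the numerical example later in this article; the critical values are the usual tabulated ones, rounded.

```latex
W^2 = 2F_{0.05;\,2,\,13} \approx 2 \times 3.806 = 7.611,
\qquad W \approx 2.759,
\qquad t_{0.025;\,13} \approx 2.160,
\qquad \frac{W}{t_{0.025;\,13}} \approx 1.28
```

Under these assumptions the Working–Hotelling band is roughly 28% wider than the single-response interval at every level of the explanatory variable, which is the price paid for coverage over all levels at once.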

Multiple linear regression

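The Working–Hotelling confidence bands can be easily generalised to multiple linear regression. Consider a general linear model as defined in the linear regression article, that is,

$$Y = X\beta + \varepsilon$$

where

$$Y = \begin{pmatrix} Y_1 \\ Y_2 \\ \vdots \\ Y_n \end{pmatrix}, \quad X = \begin{pmatrix} \mathbf{x}_1^{\mathsf T} \\ \mathbf{x}_2^{\mathsf T} \\ \vdots \\ \mathbf{x}_n^{\mathsf T} \end{pmatrix}, \quad \beta = \begin{pmatrix} \beta_1 \\ \beta_2 \\ \vdots \\ \beta_p \end{pmatrix}, \quad \varepsilon = \begin{pmatrix} \varepsilon_1 \\ \varepsilon_2 \\ \vdots \\ \varepsilon_n \end{pmatrix}$$

and $p$ is the number of regression parameters (the number of columns of $X$, counting the intercept column if there is one).

Again, it can be shown that the least-squares estimate of the mean response $E(Y_i) = \mathbf{x}_i^{\mathsf T} \beta$ is $\hat{Y}_i = \mathbf{x}_i^{\mathsf T} b$, where $b$ consists of the least-squares estimates of the entries in $\beta$, i.e. $b = (X^{\mathsf T} X)^{-1} X^{\mathsf T} Y$. Likewise, it can be shown that a $1 - \alpha$ confidence interval for a single mean response estimate is as follows:[4]

$$E(Y_i) \in \left[ \mathbf{x}_i^{\mathsf T} b \pm t_{\alpha/2;\,n-p} \sqrt{MSE \; \mathbf{x}_i^{\mathsf T} (X^{\mathsf T} X)^{-1} \mathbf{x}_i} \right]$$

where $MSE$ is the observed value of the mean squared error $(Y^{\mathsf T} Y - b^{\mathsf T} X^{\mathsf T} Y)/(n-p)$.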

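The Working–Hotelling approach to multiple estimations is similar to that of simple linear regression, with only a change in the degrees of freedom:[3]

$$E(Y_i) \in \left[ \mathbf{x}_i^{\mathsf T} b \pm W \sqrt{MSE \; \mathbf{x}_i^{\mathsf T} (X^{\mathsf T} X)^{-1} \mathbf{x}_i} \right]$$

where $W^2 = pF_{\alpha;\,p,\,n-p}$, with $p$ the number of regression parameters and $n$ the number of observations.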
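The simple linear regression band can be recovered from the multiple regression form as the special case of $p = 2$ parameters (an intercept and one slope). The following is a short derivation sketch; the shorthand $S_{xx} = \sum_j (x_j - \bar{x})^2$ and the use of $(1, x)$ as the row of the design matrix for level $x$ are notational assumptions introduced here for illustration.

```latex
X^{\mathsf T} X =
\begin{pmatrix} n & \sum_j x_j \\ \sum_j x_j & \sum_j x_j^2 \end{pmatrix},
\qquad
(X^{\mathsf T} X)^{-1} = \frac{1}{n S_{xx}}
\begin{pmatrix} \sum_j x_j^2 & -\sum_j x_j \\ -\sum_j x_j & n \end{pmatrix}

\begin{pmatrix} 1 & x \end{pmatrix}
(X^{\mathsf T} X)^{-1}
\begin{pmatrix} 1 \\ x \end{pmatrix}
= \frac{\sum_j (x_j - x)^2}{n S_{xx}}
= \frac{S_{xx} + n (x - \bar{x})^2}{n S_{xx}}
= \frac{1}{n} + \frac{(x - \bar{x})^2}{S_{xx}}
```

With $p = 2$ the multiplier becomes $W^2 = 2F_{\alpha;\,2,\,n-2}$, so both the standard error and the critical value agree with the simple linear regression case.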

Graphical representation

In the simple linear regression case, Working–Hotelling–Scheffé confidence bands, drawn by connecting the upper and lower limits of the mean response at every level, take the shape of hyperbolas. In drawing, they are sometimes approximated by the Graybill–Bowden confidence bands, which are linear and hence easier to graph:[2]

$$E(Y_i) \in \left[ b_0 + b_1 x_i \pm m_{\alpha;\,2,\,n-2} \sqrt{MSE} \left( \frac{1}{\sqrt{n}} + \frac{|x_i - \bar{x}|}{\sqrt{\sum_{j=1}^{n} (x_j - \bar{x})^2}} \right) \right]$$

where $m_{\alpha;\,2,\,n-2}$ denotes the upper $\alpha$ percentile of the Studentized maximum modulus distribution with two means and $n-2$ degrees of freedom.

Numerical example

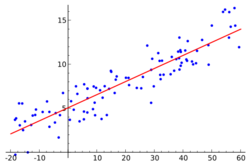

This example uses the same data as the ordinary least squares article:

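| Height (m) | Weight (kg) |
|---|---|
| 1.47 | 52.21 |
| 1.50 | 53.12 |
| 1.52 | 54.48 |
| 1.55 | 55.84 |
| 1.57 | 57.20 |
| 1.60 | 58.57 |
| 1.63 | 59.93 |
| 1.65 | 61.29 |
| 1.68 | 63.11 |
| 1.70 | 64.47 |
| 1.73 | 66.28 |
| 1.75 | 68.10 |
| 1.78 | 69.92 |
| 1.80 | 72.19 |
| 1.83 | 74.46 |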

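A simple linear regression model is fitted to these data. The values of $b_0$ and $b_1$ have been found to be −39.06 and 61.27 respectively. The goal is to estimate the mean mass of women given their heights at the 95% confidence level. The value of $W^2$ was found to be $2F_{0.05;\,2,\,13} \approx 7.611$. It was also found that $\bar{x} \approx 1.651$, $\sum_{j} (x_j - \bar{x})^2 \approx 0.1827$, and $MSE \approx 0.5762$. Then, to estimate the mean mass of all women of a particular height, the following Working–Hotelling–Scheffé band has been derived:

$$E(Y_i) \in \left[ -39.06 + 61.27 x_i \pm \sqrt{7.611 \times 0.5762 \times \left( \frac{1}{15} + \frac{(x_i - 1.651)^2}{0.1827} \right)} \right]$$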

which results in the graph on the left.
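The computation in this example can be sketched in plain Python. This is a minimal illustration rather than a reference implementation: the function names (`fit_simple_regression`, `wh_multiplier_squared`, `wh_band`) are hypothetical, and the F percentile is obtained from a closed form that holds only when the numerator has 2 degrees of freedom, which is the simple linear regression case.

```python
import math

# Height (m) and weight (kg) data from the table in this section.
heights = [1.47, 1.50, 1.52, 1.55, 1.57, 1.60, 1.63, 1.65,
           1.68, 1.70, 1.73, 1.75, 1.78, 1.80, 1.83]
weights = [52.21, 53.12, 54.48, 55.84, 57.20, 58.57, 59.93, 61.29,
           63.11, 64.47, 66.28, 68.10, 69.92, 72.19, 74.46]


def fit_simple_regression(x, y):
    """Return (b0, b1, mse, x_bar, sxx) for the least-squares line."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    b1 = sxy / sxx
    b0 = y_bar - b1 * x_bar
    sse = sum((yi - b0 - b1 * xi) ** 2 for xi, yi in zip(x, y))
    return b0, b1, sse / (n - 2), x_bar, sxx


def wh_multiplier_squared(n, alpha):
    """W^2 = 2 * F(alpha; 2, n - 2) for simple linear regression.

    With 2 numerator degrees of freedom the F survival function is
    (1 + 2f/nu)^(-nu/2), so the upper-alpha percentile has a closed form.
    """
    nu = n - 2
    return nu * (alpha ** (-2.0 / nu) - 1.0)


def wh_band(x, y, x_new, alpha=0.05):
    """Working-Hotelling (lower, upper) limits for the mean response at x_new."""
    b0, b1, mse, x_bar, sxx = fit_simple_regression(x, y)
    w = math.sqrt(wh_multiplier_squared(len(x), alpha))
    se = math.sqrt(mse * (1.0 / len(x) + (x_new - x_bar) ** 2 / sxx))
    centre = b0 + b1 * x_new
    return centre - w * se, centre + w * se


if __name__ == "__main__":
    for h in (1.50, 1.65, 1.80):
        lower, upper = wh_band(heights, weights, h)
        print(f"height {h:.2f} m: mean weight in [{lower:.2f}, {upper:.2f}] kg")
```

Evaluating `wh_band` on a fine grid of heights and joining the limits traces out the hyperbolic band described under Graphical representation. For models with more than two parameters the closed form no longer applies, and a general F percentile routine would be needed instead.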

Comparison with other methods

The Working–Hotelling approach may give tighter or looser confidence limits than the Bonferroni correction. In general, for small families of statements, the Bonferroni bounds may be tighter, but as the number of estimated values increases, the Working–Hotelling procedure will yield narrower limits. This is because the confidence level of the Working–Hotelling–Scheffé bounds is exactly $1 - \alpha$ when all values of the independent variables, i.e. $x_i \in \mathbb{R}$, are considered. Alternatively, from an algebraic perspective, the critical value $W$ remains constant as the number of estimates $g$ increases, whereas the corresponding value in the Bonferroni estimates, $t_{\alpha/(2g);\,n-p}$, grows without bound as $g$ increases. Therefore, the Working–Hotelling method is better suited to large-scale comparisons, whereas Bonferroni is preferred if only a few mean responses are to be estimated. In practice, both methods are usually computed first and the narrower interval chosen.[4]
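The crossover between the two methods can be illustrated numerically. The sketch below assumes simple linear regression with $n = 15$ observations and $\alpha = 0.05$ (the setting of the numerical example); all function names are hypothetical. Because the Python standard library has no t-distribution, the t percentile is found here by Simpson integration of the density plus bisection, which is an implementation choice for self-containment; a statistics library routine such as `scipy.stats.t.ppf` would normally be used instead.

```python
import math


def t_pdf(t, nu):
    """Density of Student's t-distribution with nu degrees of freedom."""
    log_c = (math.lgamma((nu + 1) / 2) - math.lgamma(nu / 2)
             - 0.5 * math.log(nu * math.pi))
    return math.exp(log_c - (nu + 1) / 2 * math.log1p(t * t / nu))


def t_upper_tail(t, nu, steps=400):
    """P(T > t) for t >= 0, using Simpson's rule on [0, t] (steps is even)."""
    h = t / steps
    total = t_pdf(0.0, nu) + t_pdf(t, nu)
    for k in range(1, steps):
        total += (4 if k % 2 else 2) * t_pdf(k * h, nu)
    return 0.5 - total * h / 3


def t_upper_quantile(prob, nu):
    """Solve P(T > t) = prob for t by bisection (requires 0 < prob < 0.5)."""
    lo, hi = 0.0, 1.0
    while t_upper_tail(hi, nu) > prob:  # expand until the root is bracketed
        hi *= 2
    for _ in range(50):
        mid = (lo + hi) / 2
        if t_upper_tail(mid, nu) > prob:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def wh_multiplier(n, alpha):
    """W for simple linear regression, via the closed form for F(2, n - 2)."""
    nu = n - 2
    return math.sqrt(nu * (alpha ** (-2.0 / nu) - 1.0))


def bonferroni_multiplier(n, alpha, g):
    """t critical value for g simultaneous intervals in simple regression."""
    return t_upper_quantile(alpha / (2 * g), n - 2)


if __name__ == "__main__":
    n, alpha = 15, 0.05
    print(f"Working-Hotelling W = {wh_multiplier(n, alpha):.3f}")
    for g in (1, 2, 3, 4, 5, 10):
        b = bonferroni_multiplier(n, alpha, g)
        print(f"g = {g:2d}: Bonferroni multiplier = {b:.3f}")
```

Since both methods multiply the same standard error, comparing the two multipliers is enough to decide which interval is narrower for a given number of mean responses $g$.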

Another alternative to the Working–Hotelling–Scheffé band is the Gafarian band, which is used when a confidence band is needed that maintains equal width at all levels.[5]

The Working–Hotelling procedure is based on the same principles as Scheffé's method, which gives family confidence intervals for all possible contrasts.[6] Their proofs are almost identical.[5] This is because both methods estimate linear combinations of mean response at all factor levels. However, the Working–Hotelling procedure does not deal with contrasts but with different levels of the independent variable, so there is no requirement that the coefficients of the parameters sum up to zero. Therefore, it has one more degree of freedom.[6]

See also

- Multiple comparisons

Footnotes

Bibliography

- Graybill, Franklin A.; Bowden, David C. (1967-06-01). "Linear Segment Confidence Bands for Simple Linear Models". Journal of the American Statistical Association 62 (318): 403–408. doi:10.1080/01621459.1967.10482917. ISSN 0162-1459.

- Miller, Rupert G. (1966). Simultaneous Statistical Inference. New York: Springer-Verlag. ISBN 978-1-4613-8124-2.

- Miller, R. (2014). "Multiple Comparisons I". Encyclopedia of Statistical Sciences. doi:10.1002/0471667196. ISBN 9780471667193. http://onlinelibrary.wiley.com/book/10.1002/0471667196;jsessionid=52A4434648CAAFAC4B5CF6F525F38E57.f04t04.

- Neter, John; Wasserman, William; Kutner, Michael (1990). Applied Linear Statistical Models. Tokyo: Richard D Irwin, Inc. ISBN 978-0-256-08338-5.

- Westfall, Peter H; Tobias, R D; Wolfinger, Russell Dean (2011). Multiple comparisons and multiple tests using SAS. Cary, N.C.: SAS Pub. ISBN 9781607648857.

- Working, Holbrook; Hotelling, Harold (1929-03-01). "Applications of the Theory of Error to the Interpretation of Trends". Journal of the American Statistical Association 24 (165A): 73–85. doi:10.1080/01621459.1929.10506274. ISSN 0162-1459.
