Nonlinear conjugate gradient method

In numerical optimization, the nonlinear conjugate gradient method generalizes the conjugate gradient method to nonlinear optimization. For a quadratic function [math]\displaystyle{ \displaystyle f(x) }[/math]

- [math]\displaystyle{ \displaystyle f(x)=\|Ax-b\|^2, }[/math]

the minimum of [math]\displaystyle{ f }[/math] is obtained when the gradient is 0:

- [math]\displaystyle{ \nabla_x f=2 A^T(Ax-b)=0 }[/math].

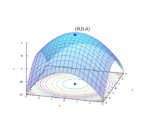

Whereas linear conjugate gradient seeks a solution to the linear equation [math]\displaystyle{ \displaystyle A^T Ax=A^T b }[/math], the nonlinear conjugate gradient method is generally used to find the local minimum of a nonlinear function using its gradient [math]\displaystyle{ \nabla_x f }[/math] alone. It works when the function is approximately quadratic near the minimum, which is the case when the function is twice differentiable at the minimum and the second derivative is non-singular there.

Given a function [math]\displaystyle{ \displaystyle f(x) }[/math] of [math]\displaystyle{ N }[/math] variables to minimize, its gradient [math]\displaystyle{ \nabla_x f }[/math] indicates the direction of maximum increase. One simply starts in the opposite (steepest descent) direction:

- [math]\displaystyle{ \Delta x_0=-\nabla_x f (x_0) }[/math]

with an adjustable step length [math]\displaystyle{ \displaystyle \alpha }[/math] and performs a line search in this direction until it reaches the minimum of [math]\displaystyle{ \displaystyle f }[/math]:

- [math]\displaystyle{ \displaystyle \alpha_0:= \arg \min_\alpha f(x_0+\alpha \Delta x_0) }[/math],

- [math]\displaystyle{ \displaystyle x_1=x_0+\alpha_0 \Delta x_0 }[/math]

After this first iteration in the steepest direction [math]\displaystyle{ \displaystyle \Delta x_0 }[/math], the following steps constitute one iteration of moving along a subsequent conjugate direction [math]\displaystyle{ \displaystyle s_n }[/math], where [math]\displaystyle{ \displaystyle s_0=\Delta x_0 }[/math]:

- Calculate the steepest direction: [math]\displaystyle{ \Delta x_n=-\nabla_x f (x_n) }[/math],

- Compute [math]\displaystyle{ \displaystyle \beta_n }[/math] according to one of the formulas below,

- Update the conjugate direction: [math]\displaystyle{ \displaystyle s_n=\Delta x_n+\beta_n s_{n-1} }[/math]

- Perform a line search: optimize [math]\displaystyle{ \displaystyle \alpha_n=\arg \min_{\alpha} f(x_n+\alpha s_n) }[/math],

- Update the position: [math]\displaystyle{ \displaystyle x_{n+1}=x_{n}+\alpha_{n} s_{n} }[/math],

With a pure quadratic function the minimum is reached within N iterations (excepting roundoff error), but a non-quadratic function will make slower progress. Subsequent search directions lose conjugacy requiring the search direction to be reset to the steepest descent direction at least every N iterations, or sooner if progress stops. However, resetting every iteration turns the method into steepest descent. The algorithm stops when it finds the minimum, determined when no progress is made after a direction reset (i.e. in the steepest descent direction), or when some tolerance criterion is reached.

Within a linear approximation, the parameters [math]\displaystyle{ \displaystyle \alpha }[/math] and [math]\displaystyle{ \displaystyle \beta }[/math] are the same as in the linear conjugate gradient method but have been obtained with line searches. The conjugate gradient method can follow narrow (ill-conditioned) valleys, where the steepest descent method slows down and follows a criss-cross pattern.

Four of the best known formulas for [math]\displaystyle{ \displaystyle \beta_n }[/math] are named after their developers:

- Fletcher–Reeves:[1]

- [math]\displaystyle{ \beta_{n}^{FR} = \frac{\Delta x_n^T \Delta x_n} {\Delta x_{n-1}^T \Delta x_{n-1}}. }[/math]

- Polak–Ribière:[2]

- [math]\displaystyle{ \beta_{n}^{PR} = \frac{\Delta x_n^T (\Delta x_n-\Delta x_{n-1})} {\Delta x_{n-1}^T \Delta x_{n-1}}. }[/math]

- Hestenes-Stiefel:[3]

- [math]\displaystyle{ \beta_n^{HS} = \frac{\Delta x_n^T (\Delta x_n-\Delta x_{n-1})} {-s_{n-1}^T (\Delta x_n-\Delta x_{n-1})}. }[/math]

- Dai–Yuan:[4]

- [math]\displaystyle{ \beta_{n}^{DY} = \frac{\Delta x_n^T \Delta x_n} {-s_{n-1}^T (\Delta x_n-\Delta x_{n-1})}. }[/math].

These formulas are equivalent for a quadratic function, but for nonlinear optimization the preferred formula is a matter of heuristics or taste. A popular choice is [math]\displaystyle{ \displaystyle \beta=\max\{0, \beta^{PR}\} }[/math], which provides a direction reset automatically.[5]

Algorithms based on Newton's method potentially converge much faster. There, both step direction and length are computed from the gradient as the solution of a linear system of equations, with the coefficient matrix being the exact Hessian matrix (for Newton's method proper) or an estimate thereof (in the quasi-Newton methods, where the observed change in the gradient during the iterations is used to update the Hessian estimate). For high-dimensional problems, the exact computation of the Hessian is usually prohibitively expensive, and even its storage can be problematic, requiring [math]\displaystyle{ O(N^2) }[/math] memory (but see the limited-memory L-BFGS quasi-Newton method).

The conjugate gradient method can also be derived using optimal control theory.[6] In this accelerated optimization theory, the conjugate gradient method falls out as a nonlinear optimal feedback controller,

[math]\displaystyle{ u = k(x, \dot x):= -\gamma_a \nabla_x f(x) - \gamma_b \dot x }[/math]for the double integrator system,

[math]\displaystyle{ \ddot x = u }[/math]

The quantities [math]\displaystyle{ \gamma_a \gt 0 }[/math] and [math]\displaystyle{ \gamma_b \gt 0 }[/math] are variable feedback gains.[6]

See also

- Gradient descent

- Broyden–Fletcher–Goldfarb–Shanno algorithm

- Conjugate gradient method

- L-BFGS (limited memory BFGS)

- Nelder–Mead method

- Wolfe conditions

References

- ↑ Fletcher, R.; Reeves, C. M. (1964). "Function minimization by conjugate gradients". The Computer Journal 7 (2): 149–154. doi:10.1093/comjnl/7.2.149.

- ↑ Polak, E.; Ribière, G. (1969). "Note sur la convergence de méthodes de directions conjuguées". Revue Française d'Automatique, Informatique, Recherche Opérationnelle 3 (1): 35–43.

- ↑ Hestenes, M. R.; Stiefel, E. (1952). "Methods of Conjugate Gradients for Solving Linear Systems". Journal of Research of the National Bureau of Standards 49 (6): 409–436. doi:10.6028/jres.049.044.

- ↑ Dai, Y.-H.; Yuan, Y. (1999). "A nonlinear conjugate gradient method with a strong global convergence property". SIAM Journal on Optimization 10 (1): 177–182. doi:10.1137/S1052623497318992.

- ↑ Shewchuk, J. R. (August 1994). "An Introduction to the Conjugate Gradient Method Without the Agonizing Pain". https://www.cs.cmu.edu/~quake-papers/painless-conjugate-gradient.pdf.

- ↑ 6.0 6.1 Ross, I. M. (2019). "An Optimal Control Theory for Accelerated Optimization". arXiv:1902.09004 [math.OC].

|