Evolutionary multimodal optimization

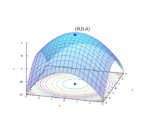

In applied mathematics, multimodal optimization deals with optimization tasks that involve finding all or most of the multiple (at least locally optimal) solutions of a problem, as opposed to a single best solution. Evolutionary multimodal optimization is a branch of evolutionary computation, which is closely related to machine learning. Wong provides a short survey,[1] wherein the chapter of Shir[2] and the book of Preuss[3] cover the topic in more detail.

Motivation

Knowledge of multiple solutions to an optimization task is especially helpful in engineering, when due to physical (and/or cost) constraints, the best results may not always be realizable. In such a scenario, if multiple solutions (locally and/or globally optimal) are known, the implementation can be quickly switched to another solution and still obtain the best possible system performance. Multiple solutions could also be analyzed to discover hidden properties (or relationships) of the underlying optimization problem, which makes them important for obtaining domain knowledge. In addition, the algorithms for multimodal optimization usually not only locate multiple optima in a single run, but also preserve their population diversity, resulting in their global optimization ability on multimodal functions. Moreover, the techniques for multimodal optimization are usually borrowed as diversity maintenance techniques to other problems.[4][5]

Background

Classical techniques of optimization would need multiple restart points and multiple runs in the hope that a different solution may be discovered every run, with no guarantee however. Evolutionary algorithms (EAs) due to their population based approach, provide a natural advantage over classical optimization techniques. They maintain a population of possible solutions, which are processed every generation, and if the multiple solutions can be preserved over all these generations, then at termination of the algorithm we will have multiple good solutions, rather than only the best solution. Note that this is against the natural tendency of classical optimization techniques, which will always converge to the best solution, or a sub-optimal solution (in a rugged, “badly behaving” function). Finding and maintenance of multiple solutions is wherein lies the challenge of using EAs for multi-modal optimization. Niching[6] is a generic term referred to as the technique of finding and preserving multiple stable niches, or favorable parts of the solution space possibly around multiple solutions, so as to prevent convergence to a single solution.

The field of Evolutionary algorithms encompasses genetic algorithms (GAs), evolution strategy (ES), differential evolution (DE), particle swarm optimization (PSO), and other methods. Attempts have been made to solve multi-modal optimization in all these realms and most, if not all the various methods implement niching in some form or the other.

Multimodal optimization using genetic algorithms/evolution strategies

De Jong's crowding method, Goldberg's sharing function approach, Petrowski's clearing method, restricted mating, maintaining multiple subpopulations are some of the popular approaches that have been proposed by the community. The first two methods are especially well studied, however, they do not perform explicit separation into solutions belonging to different basins of attraction.

The application of multimodal optimization within ES was not explicit for many years, and has been explored only recently. A niching framework utilizing derandomized ES was introduced by Shir,[7] proposing the CMA-ES as a niching optimizer for the first time. The underpinning of that framework was the selection of a peak individual per subpopulation in each generation, followed by its sampling to produce the consecutive dispersion of search-points. The biological analogy of this machinery is an alpha-male winning all the imposed competitions and dominating thereafter its ecological niche, which then obtains all the sexual resources therein to generate its offspring.

Recently, an evolutionary multiobjective optimization (EMO) approach was proposed,[8] in which a suitable second objective is added to the originally single objective multimodal optimization problem, so that the multiple solutions form a weak pareto-optimal front. Hence, the multimodal optimization problem can be solved for its multiple solutions using an EMO algorithm. Improving upon their work,[9] the same authors have made their algorithm self-adaptive, thus eliminating the need for pre-specifying the parameters.

An approach that does not use any radius for separating the population into subpopulations (or species) but employs the space topology instead is proposed in.[10]

References

- ↑ Wong, K. C. (2015), Evolutionary Multimodal Optimization: A Short Survey arXiv preprint arXiv:1508.00457

- ↑ Shir, O.M. (2012), Niching in Evolutionary Algorithms

- ↑ Preuss, Mike (2015), Multimodal Optimization by Means of Evolutionary Algorithms

- ↑ Wong, K. C. et al. (2012), Evolutionary multimodal optimization using the principle of locality Information Sciences

- ↑ Jiang, Yi; Zhan, Zhi-Hui; Tan, Kay Chen; Zhang, Jun (April 2023). "Optimizing Niche Center for Multimodal Optimization Problems". IEEE Transactions on Cybernetics 53 (4): 2544–2557. doi:10.1109/TCYB.2021.3125362. ISSN 2168-2267. PMID 34919526.

- ↑ Mahfoud, S. W. (1995), "Niching methods for genetic algorithms"

- ↑ Shir, O.M. (2008), "Niching in Derandomized Evolution Strategies and its Applications in Quantum Control"

- ↑ Deb, K., Saha, A. (2010) "Finding Multiple Solutions for Multimodal Optimization Problems Using a Multi-Objective Evolutionary Approach" (GECCO 2010, In press)

- ↑ Saha, A., Deb, K. (2010) "A Bi-criterion Approach to Multimodal Optimization: Self-adaptive Approach " (Lecture Notes in Computer Science, 2010, Volume 6457/2010, 95–104)

- ↑ C. Stoean, M. Preuss, R. Stoean, D. Dumitrescu (2010) Multimodal Optimization by means of a Topological Species Conservation Algorithm. In IEEE Transactions on Evolutionary Computation, Vol. 14, Issue 6, pages 842–864, 2010.

Bibliography

- D. Goldberg and J. Richardson. (1987) "Genetic algorithms with sharing for multimodal function optimization". In Proceedings of the Second International Conference on Genetic Algorithms on Genetic algorithms and their application table of contents, pages 41–49. L. Erlbaum Associates Inc. Hillsdale, NJ, USA, 1987.

- A. Petrowski. (1996) "A clearing procedure as a niching method for genetic algorithms". In Proceedings of the 1996 IEEE International Conference on Evolutionary Computation, pages 798–803. Citeseer, 1996.

- Deb, K., (2001) "Multi-objective Optimization using Evolutionary Algorithms", Wiley (Google Books)

- F. Streichert, G. Stein, H. Ulmer, and A. Zell. (2004) "A clustering based niching EA for multimodal search spaces". Lecture Notes in Computer Science, pages 293–304, 2004.

- Singh, G., Deb, K., (2006) "Comparison of multi-modal optimization algorithms based on evolutionary algorithms". In Proceedings of the 8th annual conference on Genetic and evolutionary computation, pages 8–12. ACM, 2006.

- Ronkkonen, J., (2009). Continuous Multimodal Global Optimization with Differential Evolution Based Methods

- Wong, K. C., (2009). An evolutionary algorithm with species-specific explosion for multimodal optimization. GECCO 2009: 923–930

- J. Barrera and C. A. C. Coello. "A Review of Particle Swarm Optimization Methods used for Multimodal Optimization", pages 9–37. Springer, Berlin, November 2009.

- Wong, K. C., (2010). Effect of Spatial Locality on an Evolutionary Algorithm for Multimodal Optimization. EvoApplications (1) 2010: 481–490

- Deb, K., Saha, A. (2010) Finding Multiple Solutions for Multimodal Optimization Problems Using a Multi-Objective Evolutionary Approach. GECCO 2010: 447–454

- Wong, K. C., (2010). Protein structure prediction on a lattice model via multimodal optimization techniques. GECCO 2010: 155–162

- Saha, A., Deb, K. (2010), A Bi-criterion Approach to Multimodal Optimization: Self-adaptive Approach. SEAL 2010: 95–104

- Shir, O.M., Emmerich, M., Bäck, T. (2010), Adaptive Niche Radii and Niche Shapes Approaches for Niching with the CMA-ES. Evolutionary Computation Vol. 18, No. 1, pp. 97-126.

- C. Stoean, M. Preuss, R. Stoean, D. Dumitrescu (2010) Multimodal Optimization by means of a Topological Species Conservation Algorithm. In IEEE Transactions on Evolutionary Computation, Vol. 14, Issue 6, pages 842–864, 2010.

- S. Das, S. Maity, B-Y Qu, P. N. Suganthan, "Real-parameter evolutionary multimodal optimization — A survey of the state-of-the-art", Vol. 1, No. 2, pp. 71–88, Swarm and Evolutionary Computation, June 2011.

External links

- Multi-modal optimization using Particle Swarm Optimization (PSO)

- Niching in Evolution Strategies (ES)

- Multimodal optimization page at Chair 11, Computer Science, TU Dortmund University

- IEEE CIS Task Force on Multi-modal Optimization

|