Powell's dog leg method

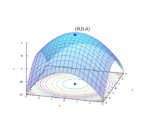

Powell's dog leg method, also called Powell's hybrid method, is an iterative optimisation algorithm for the solution of non-linear least squares problems, introduced in 1970 by Michael J. D. Powell.[1] Like the Levenberg–Marquardt algorithm, it combines the Gauss–Newton algorithm with gradient descent, but it uses an explicit trust region. At each iteration, if the step from the Gauss–Newton algorithm is within the trust region, it is used to update the current solution. If not, the algorithm searches for the minimum of the objective function along the steepest descent direction; this minimiser is known as the Cauchy point. If the Cauchy point is outside the trust region, the steepest descent step is truncated at the trust region boundary and the truncated point is taken as the new solution. If the Cauchy point is inside the trust region, the new solution is taken at the intersection between the trust region boundary and the line joining the Cauchy point and the Gauss–Newton step (dog leg step).[2] The name of the method derives from the resemblance between the construction of the dog leg step and the shape of a dogleg hole in golf.[2]

Formulation

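Given a least squares problem in the form

$$F(\boldsymbol{x}) = \frac{1}{2}\|\boldsymbol{f}(\boldsymbol{x})\|^2 = \frac{1}{2}\sum_{i=1}^{m} f_i(\boldsymbol{x})^2$$

with $f_i : \mathbb{R}^n \to \mathbb{R}$, Powell's dog leg method finds the optimal point $\boldsymbol{x}^* = \operatorname{argmin}_{\boldsymbol{x}} F(\boldsymbol{x})$ by constructing a sequence $\boldsymbol{x}_k = \boldsymbol{x}_{k-1} + \boldsymbol{\delta}_k$ that converges to $\boldsymbol{x}^*$. At a given iteration, the Gauss–Newton step is given by

$$\boldsymbol{\delta}_{\mathrm{gn}} = -(\boldsymbol{J}^\top\boldsymbol{J})^{-1}\boldsymbol{J}^\top\boldsymbol{f}(\boldsymbol{x})$$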

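where $\boldsymbol{J} = \left(\partial f_i / \partial x_j\right)$ is the Jacobian matrix of $\boldsymbol{f}$ at $\boldsymbol{x}$, while the steepest descent direction, i.e. the negative gradient $-\nabla F(\boldsymbol{x})$, is given by

$$\boldsymbol{\delta}_{\mathrm{sd}} = -\boldsymbol{J}^\top\boldsymbol{f}(\boldsymbol{x}).$$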
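A minimal NumPy sketch of the two candidate directions at a single iterate, assuming a small illustrative problem: the Rosenbrock function written as two residuals, with a hand-coded Jacobian. The function names are hypothetical, and the Gauss–Newton step is computed by solving the normal equations $\boldsymbol{J}^\top\boldsymbol{J}\,\boldsymbol{\delta}_{\mathrm{gn}} = -\boldsymbol{J}^\top\boldsymbol{f}$ rather than by forming the inverse, which assumes $\boldsymbol{J}^\top\boldsymbol{J}$ is nonsingular.

```python
import numpy as np


def residuals(x):
    # Illustrative residual vector f(x) (Rosenbrock function as two residuals);
    # F(x) = 0.5 * ||f(x)||^2 is minimised at x = (1, 1).
    return np.array([10.0 * (x[1] - x[0] ** 2), 1.0 - x[0]])


def jacobian(x):
    # J[i, j] = d f_i / d x_j for the residuals above.
    return np.array([[-20.0 * x[0], 10.0],
                     [-1.0, 0.0]])


def gauss_newton_step(J, f):
    # delta_gn = -(J^T J)^{-1} J^T f, obtained by solving the normal equations
    # (assumes J^T J is nonsingular).
    return np.linalg.solve(J.T @ J, -J.T @ f)


def steepest_descent_direction(J, f):
    # delta_sd = -J^T f, the negative gradient of F(x) = 0.5 * ||f(x)||^2.
    return -J.T @ f


x = np.array([-1.2, 1.0])
f, J = residuals(x), jacobian(x)
delta_gn = gauss_newton_step(J, f)
delta_sd = steepest_descent_direction(J, f)
print("Gauss-Newton step:", delta_gn)
print("steepest descent direction:", delta_sd)
```

At $\boldsymbol{x} = (-1.2, 1)$ this Jacobian is square and invertible, so the Gauss–Newton step reduces to $-\boldsymbol{J}^{-1}\boldsymbol{f}(\boldsymbol{x}) = (2.2, -4.84)$, while $\boldsymbol{\delta}_{\mathrm{sd}} = (107.8, 44)$.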

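The objective function is approximated by linearising the residuals along the steepest descent direction:

$$F(\boldsymbol{x} + t\boldsymbol{\delta}_{\mathrm{sd}}) \approx \frac{1}{2}\|\boldsymbol{f}(\boldsymbol{x}) + t\boldsymbol{J}\boldsymbol{\delta}_{\mathrm{sd}}\|^2 = F(\boldsymbol{x}) + t\,\boldsymbol{\delta}_{\mathrm{sd}}^\top\boldsymbol{J}^\top\boldsymbol{f}(\boldsymbol{x}) + \frac{1}{2}t^2\|\boldsymbol{J}\boldsymbol{\delta}_{\mathrm{sd}}\|^2.$$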

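To compute the value of the parameter $t$ at the Cauchy point, the derivative of the last expression with respect to $t$ is set equal to zero, giving

$$t = -\frac{\boldsymbol{\delta}_{\mathrm{sd}}^\top\boldsymbol{J}^\top\boldsymbol{f}(\boldsymbol{x})}{\|\boldsymbol{J}\boldsymbol{\delta}_{\mathrm{sd}}\|^2} = \frac{\|\boldsymbol{\delta}_{\mathrm{sd}}\|^2}{\|\boldsymbol{J}\boldsymbol{\delta}_{\mathrm{sd}}\|^2},$$

where the second equality uses $\boldsymbol{J}^\top\boldsymbol{f}(\boldsymbol{x}) = -\boldsymbol{\delta}_{\mathrm{sd}}$. The Cauchy point is then $\boldsymbol{x} + t\boldsymbol{\delta}_{\mathrm{sd}}$.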
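The closed form for $t$ can be checked numerically. This is a sketch assuming NumPy and illustrative values for $\boldsymbol{J}$ and $\boldsymbol{f}(\boldsymbol{x})$ (taken from the Rosenbrock residuals at $(-1.2, 1)$); the helper names are hypothetical. It evaluates the linearised model $\tfrac{1}{2}\|\boldsymbol{f} + t\boldsymbol{J}\boldsymbol{\delta}_{\mathrm{sd}}\|^2$ and confirms that moving $t$ away from the computed value does not lower it.

```python
import numpy as np


def cauchy_parameter(J, f):
    # t = ||delta_sd||^2 / ||J delta_sd||^2 with delta_sd = -J^T f:
    # the minimiser of the linearised model along the steepest descent direction.
    delta_sd = -J.T @ f
    J_sd = J @ delta_sd
    return (delta_sd @ delta_sd) / (J_sd @ J_sd)


def linear_model(J, f, t):
    # 0.5 * ||f + t J delta_sd||^2, the linearised objective along delta_sd.
    r = f + t * (J @ (-J.T @ f))
    return 0.5 * (r @ r)


# Illustrative Jacobian and residual values at the current iterate.
J = np.array([[24.0, 10.0],
              [-1.0, 0.0]])
f = np.array([-4.4, 2.2])

t = cauchy_parameter(J, f)
cauchy_step = t * (-J.T @ f)
print("t =", t)
print("Cauchy step:", cauchy_step)
# The model is a convex parabola in t, so perturbing t cannot decrease it.
print(linear_model(J, f, t) <= min(linear_model(J, f, 0.9 * t),
                                   linear_model(J, f, 1.1 * t)))
```

Because the model is quadratic in $t$ with positive curvature $\|\boldsymbol{J}\boldsymbol{\delta}_{\mathrm{sd}}\|^2$, the stationary point found by setting the derivative to zero is its unique minimiser.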

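Given a trust region of radius $\Delta$, Powell's dog leg method selects the update step $\boldsymbol{\delta}_k$ as equal to:

- $\boldsymbol{\delta}_{\mathrm{gn}}$, if the Gauss–Newton step is within the trust region ($\|\boldsymbol{\delta}_{\mathrm{gn}}\| \le \Delta$);

- $\frac{\Delta}{\|\boldsymbol{\delta}_{\mathrm{sd}}\|}\boldsymbol{\delta}_{\mathrm{sd}}$, if both the Gauss–Newton step and the steepest descent step $t\boldsymbol{\delta}_{\mathrm{sd}}$ are outside the trust region ($t\|\boldsymbol{\delta}_{\mathrm{sd}}\| \ge \Delta$);

- $t\boldsymbol{\delta}_{\mathrm{sd}} + s(\boldsymbol{\delta}_{\mathrm{gn}} - t\boldsymbol{\delta}_{\mathrm{sd}})$, with $s \in (0, 1)$ such that $\|\boldsymbol{\delta}_k\| = \Delta$, if the Gauss–Newton step is outside the trust region but the steepest descent step is inside (dog leg step).[1]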
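The three cases translate directly into code. Below is a minimal NumPy sketch, not Powell's original implementation: `dogleg_step` selects the update for a given radius, and the hypothetical driver `dogleg_solve` wraps it in a trust region loop. The rule for accepting steps and resizing $\Delta$ (a gain ratio comparing the actual reduction of $F$ with the reduction predicted by the linearised model) is an assumption, since the method description does not specify one; the Rosenbrock residuals serve only as a test problem.

```python
import numpy as np


def dogleg_step(J, f, radius):
    # Select Powell's dog leg update for residuals f, Jacobian J and trust radius.
    delta_gn = np.linalg.solve(J.T @ J, -J.T @ f)  # assumes J^T J nonsingular
    if np.linalg.norm(delta_gn) <= radius:
        return delta_gn  # Gauss-Newton step lies inside the trust region
    delta_sd = -J.T @ f
    J_sd = J @ delta_sd
    cauchy = (delta_sd @ delta_sd) / (J_sd @ J_sd) * delta_sd  # t * delta_sd
    if np.linalg.norm(cauchy) >= radius:
        # Both steps outside: truncate the steepest descent step at the boundary.
        return radius / np.linalg.norm(delta_sd) * delta_sd
    # Dog leg: solve ||cauchy + s d|| = radius for s in (0, 1), i.e. the
    # positive root of (d.d) s^2 + 2 (cauchy.d) s + (cauchy.cauchy - radius^2) = 0.
    d = delta_gn - cauchy
    a, b, c = d @ d, 2.0 * (cauchy @ d), cauchy @ cauchy - radius ** 2
    s = (-b + np.sqrt(b * b - 4.0 * a * c)) / (2.0 * a)
    return cauchy + s * d


def dogleg_solve(residuals, jacobian, x0, radius=1.0, tol=1e-10, max_iter=200):
    # Hypothetical driver; the gain-ratio radius update below is an assumed,
    # commonly used rule, not part of the method description.
    x = np.asarray(x0, dtype=float)
    for _ in range(max_iter):
        f, J = residuals(x), jacobian(x)
        if np.linalg.norm(J.T @ f) < tol:
            break  # gradient of F is (numerically) zero
        step = dogleg_step(J, f, radius)
        f_new = residuals(x + step)
        actual = 0.5 * (f @ f) - 0.5 * (f_new @ f_new)
        predicted = 0.5 * (f @ f) - 0.5 * np.sum((f + J @ step) ** 2)
        rho = actual / predicted
        if rho > 0:
            x = x + step  # accept steps that reduce F
        if rho > 0.75:
            radius = max(radius, 3.0 * np.linalg.norm(step))  # model is reliable
        elif rho < 0.25:
            radius /= 2.0  # model is poor: shrink the trust region
    return x


def rosenbrock_residuals(x):
    # Test problem: Rosenbrock function as two residuals, minimum at (1, 1).
    return np.array([10.0 * (x[1] - x[0] ** 2), 1.0 - x[0]])


def rosenbrock_jacobian(x):
    return np.array([[-20.0 * x[0], 10.0], [-1.0, 0.0]])


x_opt = dogleg_solve(rosenbrock_residuals, rosenbrock_jacobian, [-1.2, 1.0])
print(x_opt)
```

Solving the normal equations mirrors the formula for $\boldsymbol{\delta}_{\mathrm{gn}}$; for ill-conditioned Jacobians, `np.linalg.lstsq(J, -f, rcond=None)[0]` is a numerically safer way to obtain the same step.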

References

Sources

- Lourakis, M.L.A.; Argyros, A.A. (2005). "Is Levenberg-Marquardt the most efficient optimization algorithm for implementing bundle adjustment?". Tenth IEEE International Conference on Computer Vision (ICCV'05) Volume 1. pp. 1526–1531. doi:10.1109/ICCV.2005.128. ISBN 0-7695-2334-X.

- Yuan, Ya-xiang (2000). "A review of trust region algorithms for optimization". 99.

- Powell, M.J.D. (1970). "A new algorithm for unconstrained optimization". In Rosen, J.B.; Mangasarian, O.L.; Ritter, K. (eds.). Nonlinear Programming. New York: Academic Press. pp. 31–66.

- Powell, M.J.D. (1970). "A hybrid method for nonlinear equations". In Rabinowitz, P. (ed.). Numerical Methods for Nonlinear Algebraic Equations. London: Gordon and Breach Science. pp. 87–144.

External links

- "Equation Solving Algorithms". MathWorks. https://mathworks.com/help/optim/ug/equation-solving-algorithms.html.
