Jackknife resampling

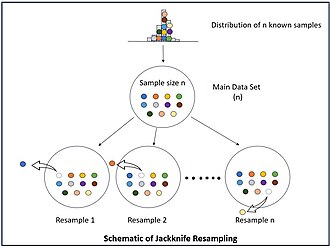

In statistics, the jackknife (jackknife cross-validation) is a cross-validation technique and, therefore, a form of resampling. It is especially useful for bias and variance estimation. The jackknife pre-dates other common resampling methods such as the bootstrap. Given a sample of size [math]\displaystyle{ n }[/math], a jackknife estimator can be built by aggregating the parameter estimates from each subsample of size [math]\displaystyle{ (n-1) }[/math] obtained by omitting one observation.[1]

The jackknife technique was developed by Maurice Quenouille (1924–1973) from 1949 and refined in 1956. John Tukey expanded on the technique in 1958 and proposed the name "jackknife" because, like a physical jack-knife (a compact folding knife), it is a rough-and-ready tool that can improvise a solution for a variety of problems even though specific problems may be more efficiently solved with a purpose-designed tool.[2]

The jackknife is a linear approximation of the bootstrap.[2]

A simple example: mean estimation

The jackknife estimator of a parameter is found by systematically leaving out each observation from a dataset and calculating the parameter estimate over the remaining observations and then aggregating these calculations.

For example, if the parameter to be estimated is the population mean of random variable [math]\displaystyle{ x }[/math], then for a given set of i.i.d. observations [math]\displaystyle{ x_1, ..., x_n }[/math] the natural estimator is the sample mean:

- [math]\displaystyle{ \bar{x} =\frac{1}{n} \sum_{i=1}^{n} x_i =\frac{1}{n} \sum_{i \in [n]} x_i, }[/math]

where the last sum uses an alternative notation to indicate that the index [math]\displaystyle{ i }[/math] runs over the set [math]\displaystyle{ [n] = \{ 1,\ldots,n\} }[/math].

Then we proceed as follows: For each [math]\displaystyle{ i \in [n] }[/math] we compute the mean [math]\displaystyle{ \bar{x}_{(i)} }[/math] of the jackknife subsample consisting of all but the [math]\displaystyle{ i }[/math]-th data point, and this is called the [math]\displaystyle{ i }[/math]-th jackknife replicate:

- [math]\displaystyle{ \bar{x}_{(i)} =\frac{1}{n-1} \sum_{j \in [n], j\ne i} x_j, \quad \quad i=1, \dots ,n. }[/math]

It can help to think of these [math]\displaystyle{ n }[/math] jackknife replicates [math]\displaystyle{ \bar{x}_{(1)},\ldots,\bar{x}_{(n)} }[/math] as an approximation of the distribution of the sample mean [math]\displaystyle{ \bar{x} }[/math], one that improves as [math]\displaystyle{ n }[/math] grows. Finally, the jackknife estimator is obtained by averaging these [math]\displaystyle{ n }[/math] jackknife replicates:

- [math]\displaystyle{ \bar{x}_{\mathrm{jack}} = \frac{1}{n}\sum_{i=1}^n \bar{x}_{(i)}. }[/math]

One may ask about the bias and the variance of [math]\displaystyle{ \bar{x}_{\mathrm{jack}} }[/math]. Both can, in principle, be calculated directly from the definition of [math]\displaystyle{ \bar{x}_{\mathrm{jack}} }[/math] as the average of the jackknife replicates. The bias is a trivial calculation, but the variance of [math]\displaystyle{ \bar{x}_{\mathrm{jack}} }[/math] is more involved because the jackknife replicates are not independent.

For the special case of the mean, one can show explicitly that the jackknife estimate equals the usual estimate:

- [math]\displaystyle{ \frac{1}{n}\sum_{i=1}^n \bar{x}_{(i)} = \bar{x}. }[/math]
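One way to verify this is to write each replicate in terms of the full-sample mean and then sum over [math]\displaystyle{ i }[/math]:

- [math]\displaystyle{ \bar{x}_{(i)} = \frac{n\bar{x} - x_i}{n-1}, \qquad \frac{1}{n}\sum_{i=1}^n \bar{x}_{(i)} = \frac{1}{n}\cdot\frac{n^2\bar{x} - n\bar{x}}{n-1} = \bar{x}. }[/math]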

This establishes the identity [math]\displaystyle{ \bar{x}_{\mathrm{jack}} = \bar{x} }[/math]. Taking expectations gives [math]\displaystyle{ E[\bar{x}_{\mathrm{jack}}] = E[\bar{x}] =E[x] }[/math], so [math]\displaystyle{ \bar{x}_{\mathrm{jack}} }[/math] is unbiased, and taking variances gives [math]\displaystyle{ V[\bar{x}_{\mathrm{jack}}] = V[\bar{x}] =V[x]/n }[/math]. However, these properties do not hold in general for parameters other than the mean.
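The construction for the mean can be checked numerically. The following is a minimal sketch in Python using only the standard library; the function name `jackknife_mean_replicates` and the five-point sample are illustrative assumptions, not part of any library or of the sources cited here.

```python
from statistics import fmean


def jackknife_mean_replicates(xs):
    """Return the n leave-one-out means of xs (hypothetical helper)."""
    n = len(xs)
    total = sum(xs)
    # Dropping x_i leaves a sum of (total - x_i) over n - 1 points.
    return [(total - x) / (n - 1) for x in xs]


# Made-up sample, used only for illustration.
data = [2.0, 4.0, 4.0, 7.0, 8.0]

replicates = jackknife_mean_replicates(data)
x_bar = fmean(data)         # ordinary sample mean
x_jack = fmean(replicates)  # average of the jackknife replicates

print(replicates)
print(x_bar, x_jack)
```

For this sample the two printed means agree, as the identity above predicts.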

This simple example of mean estimation serves only to illustrate the construction of a jackknife estimator. The real subtleties (and the usefulness) emerge when estimating other parameters, such as moments higher than the mean or other functionals of the distribution.

[math]\displaystyle{ \bar{x}_{\mathrm{jack}} }[/math] could be used to construct an empirical estimate of the bias of [math]\displaystyle{ \bar{x} }[/math], namely [math]\displaystyle{ \widehat{\operatorname{bias}}(\bar{x})_{\mathrm{jack}} = c(\bar{x}_{\mathrm{jack}} - \bar{x}) }[/math] with some suitable factor [math]\displaystyle{ c\gt 0 }[/math]. In this case we already know that [math]\displaystyle{ \bar{x}_{\mathrm{jack}} = \bar{x} }[/math], so the construction adds no new knowledge, but it does give the correct estimate of the bias (which is zero).

A jackknife estimate of the variance of [math]\displaystyle{ \bar{x} }[/math] can be calculated from the variance of the jackknife replicates [math]\displaystyle{ \bar{x}_{(i)} }[/math]:[3][4]

- [math]\displaystyle{ \widehat{\operatorname{var}}(\bar{x})_{\mathrm{jack}} =\frac{n-1}{n} \sum_{i=1}^n (\bar{x}_{(i)} - \bar{x}_{\mathrm{jack}})^2 =\frac{1}{n(n-1)} \sum_{i=1}^n (x_i - \bar{x})^2. }[/math]

The left equality defines the estimator [math]\displaystyle{ \widehat{\operatorname{var}}(\bar{x})_{\mathrm{jack}} }[/math] and the right equality is an identity that can be verified directly. Then taking expectations we get [math]\displaystyle{ E[\widehat{\operatorname{var}}(\bar{x})_{\mathrm{jack}}] = V[x]/n = V[\bar{x}] }[/math], so this is an unbiased estimator of the variance of [math]\displaystyle{ \bar{x} }[/math].
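The right-hand identity follows from [math]\displaystyle{ \bar{x}_{(i)} - \bar{x} = (\bar{x} - x_i)/(n-1) }[/math], and it can also be checked numerically. Below is a minimal sketch using only the Python standard library; the function name and the sample are illustrative assumptions.

```python
from statistics import fmean, variance


def jackknife_variance_of_mean(xs):
    """Jackknife estimate of the variance of the sample mean (hypothetical helper)."""
    n = len(xs)
    total = sum(xs)
    # Leave-one-out means, one per omitted observation.
    replicates = [(total - x) / (n - 1) for x in xs]
    center = fmean(replicates)  # equals the sample mean for this estimator
    return (n - 1) / n * sum((r - center) ** 2 for r in replicates)


# Made-up sample, used only for illustration.
data = [2.0, 4.0, 4.0, 7.0, 8.0]

var_jack = jackknife_variance_of_mean(data)
# statistics.variance uses the n - 1 divisor, so this is s^2 / n.
classical = variance(data) / len(data)

print(var_jack, classical)
```

Up to floating-point rounding, the two printed values coincide, matching the right-hand side of the formula above.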

Estimating the bias of an estimator

The jackknife technique can be used to estimate (and correct) the bias of an estimator calculated over the entire sample.

Suppose [math]\displaystyle{ \theta }[/math] is the target parameter of interest, which is assumed to be some functional of the distribution of [math]\displaystyle{ x }[/math]. Based on a finite set of observations [math]\displaystyle{ x_1, ..., x_n }[/math], which is assumed to consist of i.i.d. copies of [math]\displaystyle{ x }[/math], the estimator [math]\displaystyle{ \hat{\theta} }[/math] is constructed:

- [math]\displaystyle{ \hat{\theta} =f_n(x_1,\ldots,x_n). }[/math]

The value of [math]\displaystyle{ \hat{\theta} }[/math] is sample-dependent, so this value will change from one random sample to another.

By definition, the bias of [math]\displaystyle{ \hat{\theta} }[/math] is as follows:

- [math]\displaystyle{ \text{bias}(\hat{\theta}) = E[\hat{\theta}] - \theta. }[/math]

One may wish to compute several values of [math]\displaystyle{ \hat{\theta} }[/math] from several samples, and average them, to calculate an empirical approximation of [math]\displaystyle{ E[\hat{\theta}] }[/math], but this is impossible when there are no "other samples" because the entire set of available observations [math]\displaystyle{ x_1, ..., x_n }[/math] was used to calculate [math]\displaystyle{ \hat{\theta} }[/math]. In this kind of situation the jackknife resampling technique may be of help.

We construct the jackknife replicates:

- [math]\displaystyle{ \hat{\theta}_{(1)} =f_{n-1}(x_{2},x_{3},\ldots,x_{n}) }[/math]

- [math]\displaystyle{ \hat{\theta}_{(2)} =f_{n-1}(x_{1},x_{3},\ldots,x_{n}) }[/math]

- [math]\displaystyle{ \vdots }[/math]

- [math]\displaystyle{ \hat{\theta}_{(n)} =f_{n-1}(x_1,x_{2},\ldots,x_{n-1}) }[/math]

where each replicate is a "leave-one-out" estimate based on the jackknife subsample consisting of all but one of the data points:

- [math]\displaystyle{ \hat{\theta}_{(i)} =f_{n-1}(x_{1},\ldots,x_{i-1},x_{i+1},\ldots,x_{n}) \quad \quad i=1, \dots,n. }[/math]

Then we define their average:

- [math]\displaystyle{ \hat{\theta}_\mathrm{jack}=\frac{1}{n} \sum_{i=1}^n \hat{\theta}_{(i)} }[/math]

The jackknife estimate of the bias of [math]\displaystyle{ \hat{\theta} }[/math] is given by:

- [math]\displaystyle{ \widehat{\text{bias}}(\hat{\theta})_\mathrm{jack} =(n-1)(\hat{\theta}_\mathrm{jack} - \hat{\theta}) }[/math]

and the resulting bias-corrected jackknife estimate of [math]\displaystyle{ \theta }[/math] is given by:

- [math]\displaystyle{ \hat{\theta}_{\text{jack}}^{*} =\hat{\theta} - \widehat{\text{bias}}(\hat{\theta})_\mathrm{jack} =n\hat{\theta} - (n-1)\hat{\theta}_\mathrm{jack}. }[/math]

This removes the bias entirely in the special case that the bias is exactly proportional to [math]\displaystyle{ n^{-1} }[/math], and reduces it from [math]\displaystyle{ O(n^{-1}) }[/math] to [math]\displaystyle{ O(n^{-2}) }[/math] in other cases.[2]
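As an illustration of the bias correction, consider the plug-in variance estimator that divides by [math]\displaystyle{ n }[/math]; its bias is exactly proportional to [math]\displaystyle{ n^{-1} }[/math], so the jackknife correction should recover the usual unbiased sample variance. The following is a minimal sketch under that assumption, in plain Python; the names `plug_in_variance` and `jackknife_bias_correct` and the sample are hypothetical and chosen only for this example.

```python
def plug_in_variance(xs):
    """Biased variance estimator: mean squared deviation with divisor n."""
    n = len(xs)
    m = sum(xs) / n
    return sum((x - m) ** 2 for x in xs) / n


def jackknife_bias_correct(estimator, xs):
    """Return (bias-corrected estimate, jackknife bias estimate)."""
    xs = list(xs)
    n = len(xs)
    theta_hat = estimator(xs)
    # Leave-one-out replicates: apply the estimator with x_i removed.
    replicates = [estimator(xs[:i] + xs[i + 1:]) for i in range(n)]
    theta_jack = sum(replicates) / n
    bias = (n - 1) * (theta_jack - theta_hat)
    return theta_hat - bias, bias


# Made-up sample, used only for illustration.
data = [2.0, 4.0, 4.0, 7.0, 8.0]

corrected, bias = jackknife_bias_correct(plug_in_variance, data)
print(plug_in_variance(data), bias, corrected)
```

For this sample the corrected value agrees with the sample variance computed with the [math]\displaystyle{ n-1 }[/math] divisor. The sketch calls the estimator [math]\displaystyle{ n }[/math] times on copies of the data, which is simple but not efficient for large samples.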

Estimating the variance of an estimator

The jackknife technique can also be used to estimate the variance of an estimator calculated over the entire sample.
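A commonly used form of this estimate applies the formula given earlier for the mean to the replicates of a general estimator, that is, [math]\displaystyle{ \tfrac{n-1}{n} \sum_{i=1}^n (\hat{\theta}_{(i)} - \hat{\theta}_\mathrm{jack})^2 }[/math]. The sketch below assumes that form; the function names and the sample are illustrative, and the approach is not guaranteed to behave well for every estimator (non-smooth statistics such as the median are a known problem case).

```python
def jackknife_variance(estimator, xs):
    """Jackknife variance estimate for a generic estimator (hypothetical helper)."""
    xs = list(xs)
    n = len(xs)
    # Leave-one-out replicates of the estimator.
    replicates = [estimator(xs[:i] + xs[i + 1:]) for i in range(n)]
    theta_jack = sum(replicates) / n
    return (n - 1) / n * sum((r - theta_jack) ** 2 for r in replicates)


def mean(xs):
    return sum(xs) / len(xs)


# Made-up sample, used only for illustration.
data = [2.0, 4.0, 4.0, 7.0, 8.0]

var_of_mean = jackknife_variance(mean, data)
print(var_of_mean)
```

Passing `mean` as the estimator reproduces the closed-form result for the sample mean given in the earlier section; any other function of a list of observations can be passed in the same way.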

Literature

- Berger, Y.G. (2007). "A jackknife variance estimator for unistage stratified samples with unequal probabilities". Biometrika 94 (4): 953–964. doi:10.1093/biomet/asm072.

- Berger, Y.G.; Rao, J.N.K. (2006). "Adjusted jackknife for imputation under unequal probability sampling without replacement". Journal of the Royal Statistical Society, Series B 68 (3): 531–547. doi:10.1111/j.1467-9868.2006.00555.x.

- Berger, Y.G.; Skinner, C.J. (2005). "A jackknife variance estimator for unequal probability sampling". Journal of the Royal Statistical Society, Series B 67 (1): 79–89. doi:10.1111/j.1467-9868.2005.00489.x.

- Jiang, J.; Lahiri, P.; Wan, S-M. (2002). "A unified jackknife theory for empirical best prediction with M-estimation". The Annals of Statistics 30 (6): 1782–810. doi:10.1214/aos/1043351257.

- Jones, H.L. (1974). "Jackknife estimation of functions of stratum means". Biometrika 61 (2): 343–348. doi:10.2307/2334363.

- Kish, L.; Frankel, M.R. (1974). "Inference from complex samples". Journal of the Royal Statistical Society, Series B 36 (1): 1–37.

- Krewski, D.; Rao, J.N.K. (1981). "Inference from stratified samples: properties of the linearization, jackknife and balanced repeated replication methods". The Annals of Statistics 9 (5): 1010–1019. doi:10.1214/aos/1176345580.

- Quenouille, M.H. (1956). "Notes on bias in estimation". Biometrika 43 (3–4): 353–360. doi:10.1093/biomet/43.3-4.353.

- Rao, J.N.K.; Shao, J. (1992). "Jackknife variance estimation with survey data under hot deck imputation". Biometrika 79 (4): 811–822. doi:10.1093/biomet/79.4.811.

- Rao, J.N.K.; Wu, C.F.J.; Yue, K. (1992). "Some recent work on resampling methods for complex surveys". Survey Methodology 18 (2): 209–217.

- Shao, J. and Tu, D. (1995). The Jackknife and Bootstrap. Springer-Verlag, Inc.

- Tukey, J.W. (1958). "Bias and confidence in not-quite large samples (abstract)". The Annals of Mathematical Statistics 29 (2): 614.

- Wu, C.F.J. (1986). "Jackknife, Bootstrap and other resampling methods in regression analysis". The Annals of Statistics 14 (4): 1261–1295. doi:10.1214/aos/1176350142. https://projecteuclid.org/journals/annals-of-statistics/volume-14/issue-4/Jackknife-Bootstrap-and-Other-Resampling-Methods-in-Regression-Analysis/10.1214/aos/1176350142.full.

Notes

- [1] Efron 1982, p. 2.

- [2] Cameron & Trivedi 2005, p. 375.

- [3] Efron 1982, p. 14.

- [4] McIntosh, Avery I. "The Jackknife Estimation Method". Avery I. McIntosh. http://people.bu.edu/aimcinto/jackknife.pdf, p. 3.

References

- Cameron, Adrian; Trivedi, Pravin K. (2005). Microeconometrics : methods and applications. Cambridge New York: Cambridge University Press. ISBN 9780521848053.

- Efron, Bradley; Stein, Charles (May 1981). "The Jackknife Estimate of Variance". The Annals of Statistics 9 (3): 586–596. doi:10.1214/aos/1176345462.

- Efron, Bradley (1982). The jackknife, the bootstrap, and other resampling plans. Philadelphia, PA: Society for Industrial and Applied Mathematics. ISBN 9781611970319.

- Quenouille, Maurice H. (September 1949). "Problems in Plane Sampling". The Annals of Mathematical Statistics 20 (3): 355–375. doi:10.1214/aoms/1177729989.

- Quenouille, Maurice H. (1956). "Notes on Bias in Estimation". Biometrika 43 (3-4): 353–360. doi:10.1093/biomet/43.3-4.353.

- Tukey, John W. (1958). "Bias and confidence in not quite large samples (abstract)". The Annals of Mathematical Statistics 29 (2): 614. doi:10.1214/aoms/1177706647.
